AMD released its Radeon Software Adrenalin Edition 18.4.1 drivers a few days ago. The release notes listed just one significant addition, “Initial support for Windows 10 April 2018 Update”, alongside a few fixed issues and a list of known issues AMD is currently working on. However, it has since been discovered that AMD slipped in an extra feature that may be of great appeal to PC users, especially those building or owning HTPCs: support for Microsoft's PlayReady 3.0 DRM.

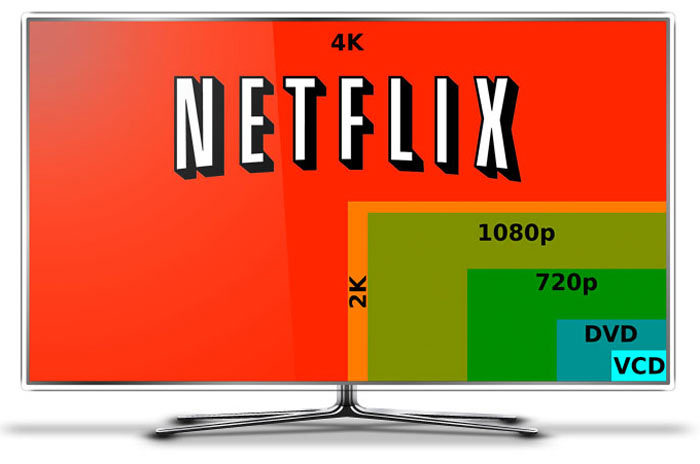

The importance of the above is that Microsoft's PlayReady 3.0 is one of the system requirements for Netflix 4K playback on a PC. Rival chipmakers have been on board with decoding this secured content for several months. Nvidia introduced the capability in GeForce driver version 387.96 in 2017, for owners of a GTX 1050 or better with at least 3GB of video memory. Users of Intel Kaby Lake and newer CPUs have also been able to watch Netflix 4K on their PCs, accelerated by the Intel IGP, since late 2016.

Hardware.info noticed (via HardOCP) that the AMD Netflix 4K decode ability had been added when perusing a reviewer’s guide document for Raven Ridge APUs. As with the Intel and Nvidia alternative routes, some other conditions must be met for Netflix 4K playback on the PC. In addition to PlayReady 3.0 compatible hardware, users need the Microsoft Edge browser, a connection to the monitor via the HDCP 2.2 protocol, an installed H.265 (HEVC) decoder, and a Netflix Premium subscription.
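For readers who like a checklist, the conditions listed above can be sketched as a small Python helper. This is only an illustration of the requirements as reported here, not an official Netflix, Microsoft, or AMD tool: the `PlaybackSetup` class, its field names, and the `missing_requirements` function are hypothetical, and the values are entered by hand rather than detected from the system.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PlaybackSetup:
    """Hand-entered description of a PC (hypothetical structure, nothing is auto-detected)."""
    playready3_hardware: bool  # GPU/driver combination supports PlayReady 3.0
    browser: str               # browser used for Netflix
    hdcp_2_2_link: bool        # monitor connection uses HDCP 2.2
    h265_decoder: bool         # an H.265 (HEVC) decoder is installed
    netflix_plan: str          # name of the Netflix subscription tier


def missing_requirements(setup: PlaybackSetup) -> List[str]:
    """Return the conditions from the article that this setup does not meet."""
    missing = []
    if not setup.playready3_hardware:
        missing.append("PlayReady 3.0 compatible hardware")
    if setup.browser.strip().lower() not in ("edge", "microsoft edge"):
        missing.append("Microsoft Edge browser")
    if not setup.hdcp_2_2_link:
        missing.append("HDCP 2.2 connection to the monitor")
    if not setup.h265_decoder:
        missing.append("H.265 decoder")
    if setup.netflix_plan.strip().lower() != "premium":
        missing.append("Netflix Premium subscription")
    return missing


if __name__ == "__main__":
    # Example: a PC that meets everything except the browser requirement.
    htpc = PlaybackSetup(True, "Chrome", True, True, "Premium")
    for item in missing_requirements(htpc):
        print("Missing:", item)
```

An empty list from `missing_requirements` means every condition in the list is ticked off; it says nothing about whether the internet connection can sustain the higher bit rate.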

In the Dutch source’s own testing it was noted that Netflix 4K video streaming came with a considerably higher bit rate than lower resolutions, which is understandable. Apart from the demands on the internet connection, Hardware.info had no issues watching Netflix 4K via a Radeon RX 580 video card using the latest Adrenalin driver.

Skipping back to the Adrenalin 18.4.1 driver release notes, one of the listed known issues is that Netflix can stumble on multi-GPU systems using Radeon RX 400 series or Radeon RX 500 series products.