Asus launched its highly anticipated ROG Swift PG27UQ 27-inch 4K 144Hz G-Sync monitor around a month ago, with availability set for this month. This flagship, green-team-friendly gaming monitor is still on pre-order, at least here in the UK, but tech site PCPer has been lucky enough to have one through its test labs.

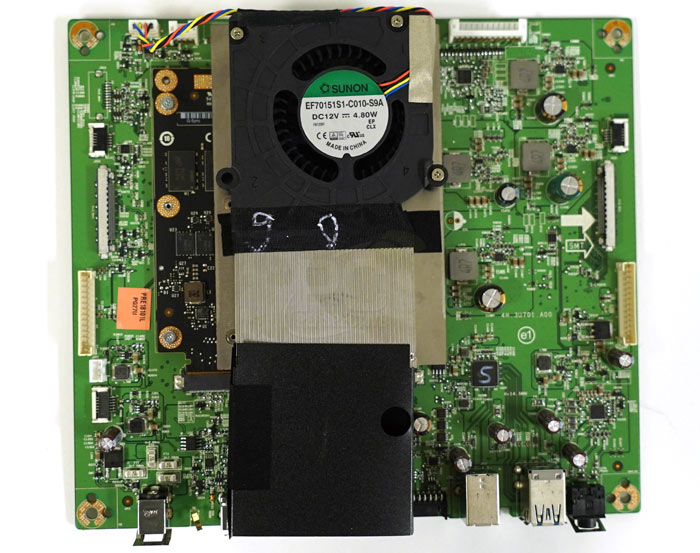

The interesting five-page review over at PCPer goes into quite some depth. On page two you will even find a teardown and component analysis of the $1999 gaming product from Asus ROG.

At the heart of the display is the M270QAN02.2 panel from AU Optronics. For the greatest contrast this LCD panel uses a 384-zone FALD (full-array local dimming) backlight. This helps deliver the best black levels possible by dimming or switching off the backlight zones behind dark areas of the image, though it cannot match the per-pixel granularity of OLED tech, of course.
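To put that granularity gap in rough numbers, here is a small Python sketch. It assumes the 384 zones are of equal size and evenly cover the 3840 x 2160 panel; the actual zone layout is not stated here, so treat the result as an average only.

```python
# Rough granularity comparison: how many pixels share one backlight zone.
# Assumption: zones are equal in size and evenly cover the panel.
WIDTH, HEIGHT = 3840, 2160   # 4K UHD resolution
FALD_ZONES = 384             # zone count quoted for this panel

pixels_per_zone = (WIDTH * HEIGHT) // FALD_ZONES
print(f"{pixels_per_zone:,} pixels per backlight zone")
```

An OLED panel, by comparison, controls light output for every pixel individually, which is why a bright object on a dark background can still show some haloing on a FALD display.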

The next major component worth attention is the new G-Sync module Nvidia has designed for these G-Sync HDR displays. The previous module was well known to add a couple of hundred dollars to the monitor price, but this new generation module could be adding $500+ to the total bill of materials (BOM).

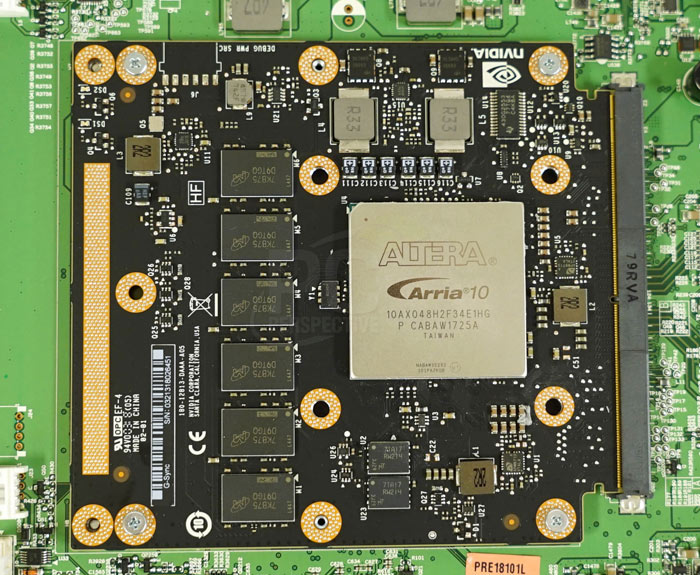

PCPer notes that the G-Sync HDR module packs costly sub-components including an Intel FPGA and 4GB of DDR4-2400 RAM. (The first gen G-Sync module has 768MB of memory.) Its investigations found that the Intel Altera Arria 10 GX 480 FPGA is sold to private and low volume customers at $2600, so it thinks it is reasonable that a larger customer like Nvidia/Asus might spend $500 on the same programmable chip.

With the cooler assembly removed you can clearly see the Intel FPGA chip and Micron DDR4 RAM chips.

Despite the expense lavished on the components in these Nvidia G-Sync HDR gaming displays, there have been reports of disappointment from early adopters of the Asus ROG Swift PG27UQ and the Acer Predator X27. Due to the limits of the DisplayPort 1.4 interface they suffer from "a noticeable degradation in image quality" when running at their top quoted specs of 4K resolution at peak 144Hz refresh. The optimal setting is said to be with the refresh rate dialled down to 120Hz, using 8 bits per channel colour depth, so the YCbCr 4:2:2 chroma subsampling doesn't kick in.
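The bandwidth squeeze can be checked with some back-of-the-envelope arithmetic. The Python sketch below is illustrative only: it assumes a four-lane HBR3 DisplayPort 1.4 link (32.4Gbit/s raw, 25.92Gbit/s of payload after 8b/10b encoding) and ignores blanking intervals, so real-world requirements are somewhat higher than the figures it prints. The function names are our own.

```python
# Back-of-the-envelope DisplayPort 1.4 bandwidth check.
# Assumptions: four HBR3 lanes with 8b/10b encoding, blanking ignored.

DP14_RAW_GBPS = 32.4                          # 4 lanes x 8.1 Gbit/s
DP14_PAYLOAD_GBPS = DP14_RAW_GBPS * 8 / 10    # about 25.92 Gbit/s usable

# Average number of samples stored per pixel for each chroma format.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0}


def required_gbps(width, height, refresh_hz, bits_per_channel, chroma="4:4:4"):
    """Active-pixel data rate in Gbit/s for an uncompressed video mode."""
    bits_per_pixel = bits_per_channel * SAMPLES_PER_PIXEL[chroma]
    return width * height * refresh_hz * bits_per_pixel / 1e9


def fits_dp14(width, height, refresh_hz, bits_per_channel, chroma="4:4:4"):
    """True if the mode fits within the assumed DP 1.4 payload rate."""
    needed = required_gbps(width, height, refresh_hz, bits_per_channel, chroma)
    return needed <= DP14_PAYLOAD_GBPS


modes = [
    (144, 10, "4:4:4"),   # headline spec with full chroma
    (144, 8, "4:4:4"),
    (144, 10, "4:2:2"),   # chroma subsampling kicks in
    (120, 8, "4:4:4"),    # the setting said to be optimal
]
for hz, bpc, chroma in modes:
    rate = required_gbps(3840, 2160, hz, bpc, chroma)
    verdict = "fits" if fits_dp14(3840, 2160, hz, bpc, chroma) else "too much"
    print(f"4K {hz}Hz {bpc}-bit {chroma}: {rate:.2f} Gbit/s ({verdict})")
```

Under these assumptions 4K at 144Hz with full 4:4:4 chroma exceeds the link budget at either colour depth, while 4K at 120Hz with 8 bits per channel squeezes in, which is consistent with the recommended setting.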