Researchers at the California Institute of Technology (Caltech) have developed a tiny new 3D imaging device. The nanophotonic coherent imager (NCI) could allow device makers to build 3D scanners into smartphones or wearables without adding significantly to their cost.

The device captures 3D image data using technology based on LIDAR (Light Detection and Ranging). Caltech's NCI illuminates an object with 'coherent light', in which the peaks and troughs of the light waves are aligned. It then analyses the light reflected by the object to build models containing height, width, and depth information.

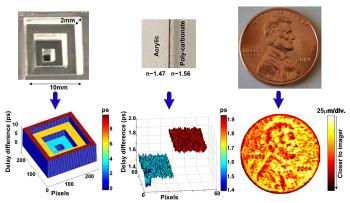

The NCI used in testing was very small, less than a square millimetre in area, according to Engadget. It contains only a 4x4 array of coherent pixels, so for now it can scan only small objects. Even the small coin pictured required multiple passes to capture a complete scan. However, the device is impressively accurate, with 15μm depth resolution and 50μm lateral resolution (the latter limited by the pixel spacing).

The Caltech researchers say the sensor could easily be scaled up to hundreds of thousands of pixels. In the near future, the NCI could suit a diverse range of technologies that rely on precise 3D imaging, from scanners for 3D printers to biomedical imaging, security, robotics, and self-driving car sensors.