Introduction

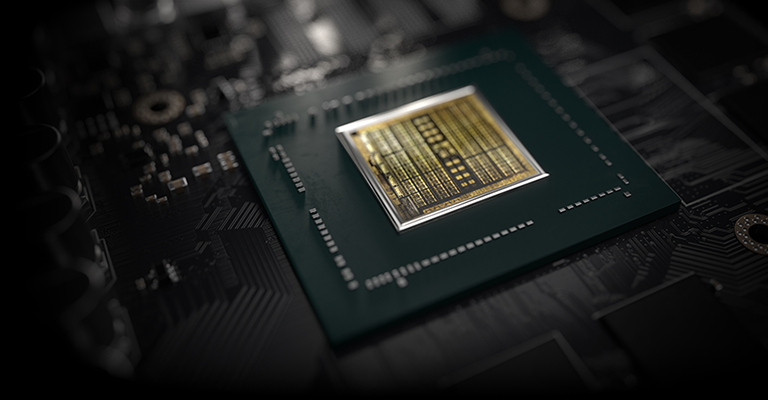

The launch of the GeForce GTX 1660 graphics card is important for Nvidia because it brings the latest-generation Turing architecture down to a £199 price point. The GPU design is also interesting insofar as it reuses plenty of the back-end technology present on older cards: 6GB of GDDR5 memory operating at 8Gbps and interfacing via a 192-bit bus is the same as on the popular GTX 1060, for example. What's new, of course, are the more efficient Turing cores and the refined 12nm manufacturing process.
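Because the memory subsystem is carried over unchanged, peak memory bandwidth is identical too. As a rough sketch (the helper name is our own, and the figure is a theoretical peak that ignores compression and protocol overheads), peak bandwidth is simply bus width in bits multiplied by the per-pin data rate, divided by eight to convert bits to bytes:

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s."""
    # Every bit of bus width carries data_rate_gbps gigabits per second;
    # dividing by 8 converts gigabits to gigabytes.
    return bus_width_bits * data_rate_gbps / 8

gtx_1660 = memory_bandwidth_gbs(192, 8.0)  # 192-bit bus, 8Gbps GDDR5
gtx_1060 = memory_bandwidth_gbs(192, 8.0)  # same back end as GTX 1660
gtx_960 = memory_bandwidth_gbs(128, 7.0)   # 128-bit bus, 7Gbps GDDR5
print(gtx_1660, gtx_1060, gtx_960)  # 192.0 192.0 112.0
```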

Nvidia thinks this Turing architecture and an increase in the number of cores ought to be enough to propel the GTX 1660 into the next performance league up from, say, the GeForce GTX 1060, the GPU it effectively replaces in the midrange stack. On paper, such statements ring true, and it's instructive to evaluate pragmatically how the GTX 1660 improves not only upon its predecessor but also upon an older x60 card such as the GeForce GTX 960.

GeForce GTX 1660 vs. GTX 1060 vs. GTX 960

| GPU name | GTX 1660 | GTX 1060 | GTX 960 |
|---|---|---|---|
| Launch date | March 2019 | July 2016 | January 2015 |
| Codename | TU116 | GP106 | GM206 |
| Architecture | Turing | Pascal | Maxwell |
| Process (nm) | 12 | 16 | 28 |
| Transistors (bn) | 6.6 | 4.4 | 2.9 |
| Die Size (mm²) | 284 | 200 | 227 |
| Base Clock (MHz) | 1,530 | 1,506 | 1,126 |
| Boost Clock (MHz) | 1,785 | 1,708 | 1,178 |
| Shaders | 1,408 | 1,280 | 1,024 |
| GFLOPS | 5,027 | 4,372 | 2,416 |
| Memory Size | 6GB | 6GB | 2GB |
| Memory Bus | 192-bit | 192-bit | 128-bit |
| Memory Type | GDDR5 | GDDR5 | GDDR5 |
| Memory Clock | 8Gbps | 8Gbps | 7Gbps |
| Memory Bandwidth (GB/s) | 192 | 192 | 112 |
| ROPs | 48 | 48 | 32 |
| Texture Units | 88 | 80 | 64 |
| Power Connector | 8-pin | 6-pin | 6-pin |
| TDP (watts) | 120 | 120 | 120 |
| Launch MSRP | $219 | $249 | $199 |

Analysis

Launch prices, quoted in US dollars, show that the comparison is a fair one: all three cards debuted between $199 and $249. The question then becomes one of understanding the specification improvements made in the 32 months between GTX 1060 and GTX 1660.
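The generational gaps follow directly from the launch months in the table. A trivial sketch (the helper is our own) counts whole calendar months between two launches:

```python
def months_between(start_year: int, start_month: int, end_year: int, end_month: int) -> int:
    """Whole calendar months from one (year, month) launch date to another."""
    return (end_year - start_year) * 12 + (end_month - start_month)

# Launch months from the table: GTX 960 Jan 2015, GTX 1060 Jul 2016, GTX 1660 Mar 2019.
print(months_between(2016, 7, 2019, 3))  # 32 (GTX 1060 to GTX 1660)
print(months_between(2015, 1, 2019, 3))  # 50 (GTX 960 to GTX 1660)
```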

Given that boost clocks are similar, GFLOPS throughput increases more or less in line with the extra cores, of which the GTX 1660 has exactly 10 per cent more. Hardly earth-shattering, is it? Nvidia's response would be that Turing CUDA cores can do more work than Pascal's, primarily through a combination of concurrent integer and floating-point execution alongside smarter, deeper caching. There's also Turing-only variable-rate shading, now adopted by DX12, offering another potential performance uplift.
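The table's GFLOPS column follows the usual convention for peak FP32 throughput: shaders × 2 FLOPs (one fused multiply-add per clock) × boost clock. A minimal sketch, with a helper name of our own choosing, reproduces the GTX 1660 and GTX 1060 figures and separates the core-count gain from the small contribution of the higher boost clock:

```python
def fp32_gflops(shaders: int, boost_mhz: int) -> float:
    """Peak single-precision GFLOPS, assuming one FMA (2 FLOPs) per shader per clock."""
    return shaders * 2 * boost_mhz / 1000

gtx_1660 = fp32_gflops(1408, 1785)
gtx_1060 = fp32_gflops(1280, 1708)
print(round(gtx_1660), round(gtx_1060))  # 5027 4372

# Split the generational gain into its core-count and overall-throughput parts.
core_gain = 1408 / 1280 - 1
flops_gain = gtx_1660 / gtx_1060 - 1
print(f"cores +{core_gain:.0%}, GFLOPS +{flops_gain:.0%}")  # cores +10%, GFLOPS +15%
```

The remaining five or so points come from the GTX 1660's slightly higher rated boost clock; none of this captures Turing's per-core efficiency gains, which is Nvidia's main argument.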

And it's in the GPU's shader core, rather than its memory back end, that performance gains will most readily be made, because the back end, as we have alluded to, is eerily similar. Nvidia is therefore offering between 10 and 20 per cent more performance for the same power budget and, dollar for dollar, a 12 per cent smaller financial outlay in nominal terms. Enough for 2.5 years of development? Good question.
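The price and value arithmetic is easy to check. The sketch below (helper names are ours) derives the 12 per cent saving from the two launch MSRPs and, assuming the GTX 1660 lands at the 10 to 20 per cent uplift quoted above, what that means for performance per dollar; the uplift range is an input here, not a measured result:

```python
PRICE_1060_USD = 249  # launch MSRP from the table
PRICE_1660_USD = 219

def pct_cheaper(old_price: float, new_price: float) -> float:
    """Fractional price reduction relative to the older card."""
    return (old_price - new_price) / old_price

def value_gain(perf_uplift: float, old_price: float, new_price: float) -> float:
    """Fractional change in performance per dollar for a given performance uplift."""
    return (1 + perf_uplift) * old_price / new_price - 1

print(f"{pct_cheaper(PRICE_1060_USD, PRICE_1660_USD):.0%}")  # 12%
for uplift in (0.10, 0.20):
    gain = value_gain(uplift, PRICE_1060_USD, PRICE_1660_USD)
    print(f"+{uplift:.0%} perf -> +{gain:.0%} perf per dollar")
# +10% perf -> +25% perf per dollar
# +20% perf -> +36% perf per dollar
```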

One can argue that the GeForce GTX 1060 was an outlier GPU because it packed so much performance in for a relatively modest sum. Going back another generation to the GTX 960 (Nvidia says the GeForce install base is still predominantly from this era) shows that both the GTX 1060 and GTX 1660 are considerably more powerful. The 1660 vs. 960 specs suggest bountiful improvement in four years, to the tune of 250 per cent in certain game titles.
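To put the four-year gap in paper terms, a quick ratio of the table's figures helps. Treat this as a sketch only: peak GFLOPS and bandwidth are theoretical ceilings, and gains in games may also depend on architectural efficiency and, for a 2GB card, on whether a title runs short of video memory:

```python
# Figures copied from the spec table (GTX 960 is the 2GB model).
specs = {
    "GTX 1660": {"gflops": 5027, "bandwidth_gbs": 192, "memory_gb": 6},
    "GTX 960": {"gflops": 2416, "bandwidth_gbs": 112, "memory_gb": 2},
}

# Ratio of each paper spec, newer card over older card.
ratios = {
    key: specs["GTX 1660"][key] / specs["GTX 960"][key]
    for key in specs["GTX 1660"]
}

for key, ratio in ratios.items():
    print(f"{key}: {ratio:.2f}x")
# gflops: 2.08x
# bandwidth_gbs: 1.71x
# memory_gb: 3.00x
```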

Moving away from speeds and feeds, we'd like to see a $219 (£200) graphics card of 2019 play GPU-heavy games (Far Cry 5, Shadow of the Tomb Raider, The Division 2, and the like) at fluid, smooth FHD framerates allied to stunning image quality. Holding 60fps at QHD would be a bonus. It will also be interesting to see how the GTX 1060 and GTX 960 match up.

As is the norm these days, Nvidia's partners release a multitude of cards based on each GPU, differentiated by the quality of the cooler, ancillary features, and a minor core overclock. The truth is there's little to distinguish the best, most expensive card from the cheapest model of a particular class of GPU, in pure frames-per-second benchmarks at least. Our own research shows that it's mighty difficult to improve upon an entry-level GeForce's performance by more than five per cent, and even if you do so, pricing spirals so far north as to impinge on the next GPU up, one that's a fair bit faster by default.

This thinking is why we'd like to see a GeForce GTX 1660 hit that £200 price point, come in a small-ish form factor, perhaps carry a minor overclock, and be reasonably cool and quiet. Hoping to tick all these boxes is the Gigabyte GeForce GTX 1660 OC, so let's take a quick peek.

Gigabyte GeForce GTX 1660 OC (GV-N1660OC-6GD)

No need for GeForce GTX 1660 to be a large card, right?

Simplicity is the name of the game here. Measuring 224mm (l) x 121mm (d) x 40mm (h), the twin-fan OC model looks and feels like a midrange card. A few points to note. The fans spin in opposite directions to minimise turbulence and push air out of the top of the card, which means plenty of it will be recirculated into the chassis. The fans switch off at low loads, which is normally considered a positive, yet when they do spin up, the ramp is quick, severe and noticeable, akin to revving. We now believe it's best for such cards to keep their fans on at all times, minimising this revving and keeping the GPU cool(er) when idling.

The heatsink itself is nice and simple. A single heatpipe runs through a large metal plate and, flattened at the centre, makes direct contact with the baby TU116 GPU. This plate also cools the GDDR5 memory, while Gigabyte includes basic thermal tape for the VRMs. The rear of the card is covered by a plastic backplate whose only job is to keep the topside cooler firmly attached; there are no meaningful components to cool on the back, so there's no need for thermal transfer pads or extravagant heatsinks.

Outputs are perhaps too modern? Triple DisplayPort and a single HDMI

As an entry-level model, where hitting a keen price point is paramount, the OC has no room for RGB lighting. The card is no worse off for it in our opinion, and Gigabyte sensibly sticks to an 8-pin power connector. It's surprising, and pleasing, that the OC lives up to its name by raising the rated boost clock from 1,785MHz to 1,830MHz, with the real-world frequency averaging 1,930MHz inside our test chassis. Perusing other GTX 1660 cards shows that even the most expensive (£250) models have a specified boost speed of only 1,860MHz, and all, like the review model, use standard 8Gbps GDDR5 memory; no partner is adventurous enough to overclock the RAM. It's also handy to know that Gigabyte provides a three-year warranty on its graphics cards.
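Expressed as percentages over Nvidia's 1,785MHz reference boost, the clock figures above are modest. A small sketch (constant and helper names are ours) makes the comparison explicit; the observed figure sits higher than any rated value because GPU Boost runs above the rated boost clock when power and thermal headroom allow:

```python
REFERENCE_BOOST_MHZ = 1785  # Nvidia's GTX 1660 specification
GIGABYTE_OC_BOOST_MHZ = 1830
PRICIEST_PARTNER_BOOST_MHZ = 1860  # highest rated boost among other GTX 1660 cards
OBSERVED_AVG_MHZ = 1930  # average measured in our test chassis

def uplift(base_mhz: int, new_mhz: int) -> float:
    """Fractional clock increase over a base frequency."""
    return new_mhz / base_mhz - 1

print(f"Gigabyte OC rated: +{uplift(REFERENCE_BOOST_MHZ, GIGABYTE_OC_BOOST_MHZ):.1%}")  # +2.5%
print(f"Priciest partner rated: +{uplift(REFERENCE_BOOST_MHZ, PRICIEST_PARTNER_BOOST_MHZ):.1%}")  # +4.2%
print(f"Observed average: +{uplift(REFERENCE_BOOST_MHZ, OBSERVED_AVG_MHZ):.1%}")  # +8.1%
```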

Unlike rival AMD, Nvidia is not providing a gaming bundle with the GTX 1660 at present. Anyhow, let's get this generational comparison started.