Display, Encoder And SLI Improvements

It makes sense to overhaul the display and video technology when bringing a new generation of GPUs to market. Nvidia has done exactly this for Turing.

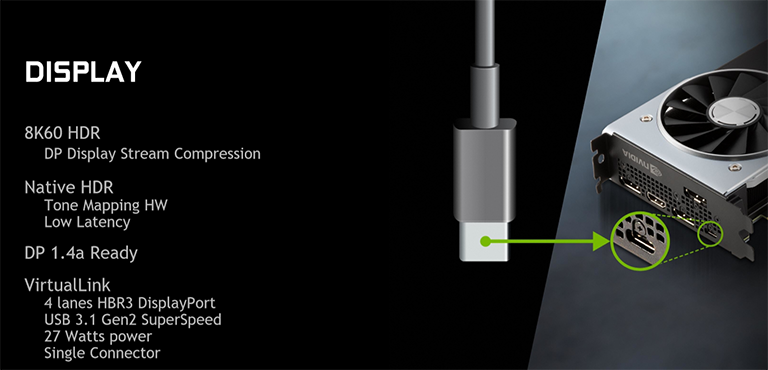

DisplayPort has been upgraded from 1.4 to 1.4a, or, more precisely, the architecture is DP1.4a-ready. What this means in a current context is that it will support 8K60 resolutions on at least two outputs, plus have VESA's Display Stream Compression (DSC) technology baked in. DSC is interesting insofar as it is what VESA describes as visually lossless compression, which is what lets the higher bit depths associated with native HDR fit down the cable at these resolutions. Turing also carries tone-mapping hardware in the display pipeline, which translates HDR content into SDR for regular displays.
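
To put a rough number on why compression matters here, the sketch below compares an 8K60 stream against what four HBR3 lanes can carry. It assumes 10-bit RGB (30 bits per pixel), counts active pixels only (blanking is ignored), and uses DisplayPort's 8.1 Gbit/s HBR3 lane rate with 8b/10b coding; the function names are purely illustrative.

```python
# Back-of-the-envelope check: does uncompressed 8K60 fit in four HBR3 lanes?
# Assumptions: 10-bit RGB (30 bits per pixel), active pixels only, no blanking.

HBR3_GBIT_PER_LANE = 8.1      # raw line rate of one HBR3 lane, in Gbit/s
ENCODING_EFFICIENCY = 8 / 10  # 8b/10b channel coding overhead


def link_payload_gbit(lanes=4):
    """Usable bandwidth of an HBR3 link after 8b/10b coding, in Gbit/s."""
    return lanes * HBR3_GBIT_PER_LANE * ENCODING_EFFICIENCY


def stream_gbit(width, height, refresh_hz, bits_per_pixel):
    """Bandwidth of the active picture area only, in Gbit/s."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


payload = link_payload_gbit()
needed = stream_gbit(7680, 4320, 60, 30)
required_ratio = needed / payload

print(f"Link payload:       {payload:.2f} Gbit/s")
print(f"8K60 at 30 bpp:     {needed:.2f} Gbit/s")
print(f"Compression needed: {required_ratio:.2f}:1")
```

Under those assumptions the stream needs a little over twice what the link can carry, which is the gap DSC is there to close.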

Nvidia has also looked at the whole VR setup and reckoned it was time to make connection easier for headsets. Currently, a headset needs an HDMI cable from the card plus USB connections back to the PC. That's three cables on, say, the Oculus Rift - HDMI and two USB 3.0 - so it can become messy.

What you'll notice on the back of all Turing boards is a USB Type-C connector designed for VR purposes. It replaces that aforementioned trio with one cable, carrying four lanes of HBR3 DisplayPort, USB 3.1 Gen 2 data, and up to 27W of power. This technology is called VirtualLink, which is an open industry standard. A standard USB Type-C connection, on the other hand, can only pair two HBR3 lanes with SuperSpeed USB data. Bear in mind that VirtualLink pulls power from the GPU and can raise board power by up to 35W.

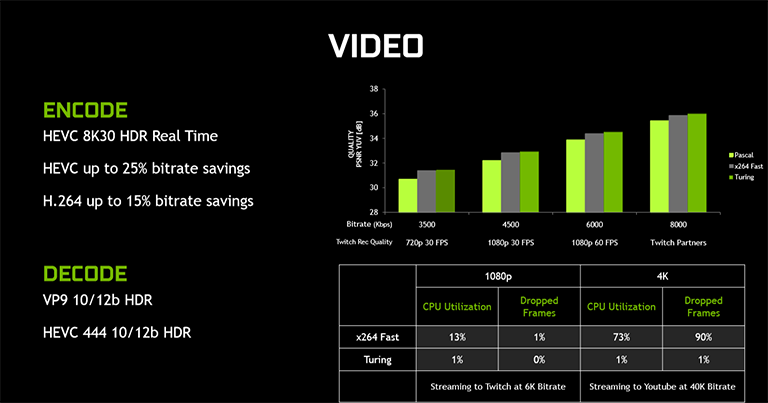

The other improvement is in the way Turing handles video, courtesy of an updated NVENC encoder. Though Pascal already supports space-saving H.265 (HEVC), Turing adds 8K30 HDR encode, while the decoder has also been updated to support HEVC YUV444 10/12-bit HDR at 30 fps, H.264 8K, and VP9 10/12-bit HDR.

It's interesting to see that Nvidia reckons the new encoder can make quite significant bit-rate savings at the same quality level, which will be handy for those streaming out. Plus, with practically no CPU utilisation and very few dropped frames, the GPU, via optimised software (OBS, for example), ought to be able to stream to various platforms at 4K, putting high-end capture cards in a bit of a sticky situation.

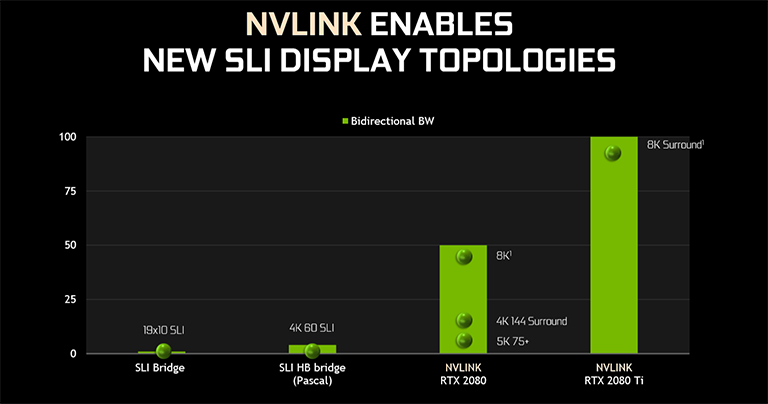

Nvidia has also changed the way in which multiple boards communicate with one another for multi-GPU SLI. Instead of relying on the old-school bridges for inter-GPU transfers, Turing uses either one (TU104) or two (TU102) dedicated NVLinks - a technology first seen in Nvidia's P100 chip. Each second-generation NVLink provides 25GB/s of bandwidth in each direction between two GPUs, so 50GB/s bidirectionally, and you can double that for dual NVLinks. Note that Nvidia will only support two-way SLI with this new GPU-to-GPU technology, and a compatible RTX NVLink Bridge will cost a whopping $79.
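
As a quick sanity check on those totals, here is a minimal sketch of the per-GPU arithmetic. It takes the per-link figure as 25 GB/s in each direction and the link counts from the paragraph above; the names are illustrative rather than anything from Nvidia's tooling.

```python
# Aggregate NVLink bandwidth between two identical Turing GPUs.
# Assumption: each link carries 25 GB/s in each direction.

GB_S_PER_LINK_PER_DIRECTION = 25

# Number of NVLinks per GPU, as described in the text.
LINKS = {"TU104": 1, "TU102": 2}


def nvlink_bandwidth(gpu):
    """Return (per-direction, bidirectional) bandwidth in GB/s."""
    one_way = LINKS[gpu] * GB_S_PER_LINK_PER_DIRECTION
    return one_way, 2 * one_way


for gpu in LINKS:
    one_way, both_ways = nvlink_bandwidth(gpu)
    print(f"{gpu}: {one_way} GB/s each way, {both_ways} GB/s bidirectional")
```

So a pair of TU102 boards tops out at 100 GB/s bidirectionally, twice what a TU104 pair can manage.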

It's pretty easy to see that the older SLI HB bridges were very nearly at their limit for high-res screens. Upping the inter-GPU bandwidth by at least 15x paves the way for esoteric SLI setups without running into data communication problems. It's high time that Nvidia sorted this obvious multi-GPU issue out.

There is a whole heap more to say about the way the new Turing cards overclock, their power-delivery system, cooling efficiency, and so forth. Those details will be covered in the full card review next week. For now, let's actually see what Turing, in its GeForce RTX form, is all about.