Engineers at the University of Illinois at Urbana-Champaign and the University of California, Los Angeles (UCLA) think it is time for another attempt at building a wafer-scale computer. Rekindling the concept, after the infamous debacle at Gene Amdahl's Trilogy Systems left a financial 'crater', is a brave but possibly timely move for the industry.

Illinois computer engineering associate professor Rakesh Kumar and his collaborators will present their research on a new wafer-scale computer at the IEEE International Symposium on High-Performance Computer Architecture later this month. IEEE Spectrum magazine reports that the design impressed in the researchers' simulations: a wafer-scale computer containing as many as 40 GPUs "sped calculations nearly 19-fold and cut the combination of energy consumption and signal delay more than 140-fold," it is claimed.
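The "combination of energy consumption and signal delay" reads like the energy-delay product (EDP), a common figure of merit that multiplies the energy a workload uses by the time it takes. The minimal sketch below assumes that interpretation, and also assumes that "delay" tracks overall run time; neither is confirmed by the report. Under those assumptions, the two quoted figures together imply a rough energy saving. The function names and the 1 J / 1 s baseline are hypothetical, chosen only for illustration.

```python
# Energy-delay product (EDP): energy multiplied by delay. Lower is better.
# Assumption: the article's "combination of energy consumption and signal
# delay" is EDP, and delay scales with run time.

def edp(energy_joules: float, delay_seconds: float) -> float:
    """Return the energy-delay product in joule-seconds."""
    return energy_joules * delay_seconds


def implied_energy_ratio(edp_improvement: float, speedup: float) -> float:
    """Energy reduction factor implied by an EDP gain and a speedup.

    Since EDP = E * D, the EDP gain equals (E_old / E_new) * (D_old / D_new),
    so dividing the EDP gain by the speedup leaves the energy gain.
    """
    return edp_improvement / speedup


# Hypothetical baseline system: 1.0 J and 1.0 s (arbitrary units).
baseline = edp(1.0, 1.0)

# Figures quoted in the report: nearly 19x faster, more than 140x better EDP.
speedup = 19.0
edp_gain = 140.0

energy_gain = implied_energy_ratio(edp_gain, speedup)
waferscale = edp(1.0 / energy_gain, 1.0 / speedup)

print(f"implied energy reduction: {energy_gain:.1f}x")
print(f"EDP improvement check: {baseline / waferscale:.1f}x")
```

Because the quoted figures are "nearly" and "more than", the resulting energy factor (roughly 7x) is only a ballpark reading, not a number the researchers reported.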

Prof Kumar told IEEE Spectrum: "The big problem we are trying to solve is the communication overhead between computational units." As many HEXUS readers will know, supercomputers usually spread applications across hundreds of GPUs on separate PCBs, which communicate over long-haul links. Such links are slow and energy-inefficient compared with the interconnects inside a chip, so getting data from one GPU to another creates an "incredible amount of overhead," says Kumar.

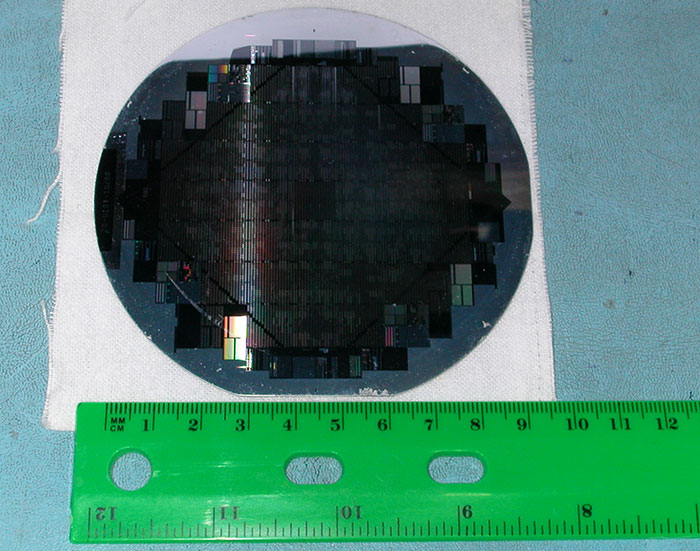

An early wafer-scale integration attempt by Trilogy Systems. Image from Wikipedia.

Back in the 1980s, Amdahl's Trilogy tried to use standard chip-making techniques to build an entire processor on a single silicon wafer; the equivalent approach today would be to fabricate all 40 GPUs on one wafer and create the interconnects between them. In practice, the IEEE report suggests, defect rates were too high. Instead, the researchers take normal-sized GPU chips that have already passed quality tests and mount them on a wafer using a technology called silicon interconnect fabric (SiIF). SiIF provides wiring between the chips comparable to what a single large wafer would offer, and the GPUs are aligned and connected to it using short copper pillars spaced about 5 micrometers apart. Thermal compression bonding then completes the connections. According to the Illinois and UCLA researchers, this technique allows at least 25 times more inputs and outputs on a chip.
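To get a feel for what a 5-micrometer pillar spacing means, the sketch below assumes the pillars sit on a regular square grid, so the number of connections per area scales with the inverse square of the pitch. The 25-micrometer comparison pitch is purely hypothetical: it is simply the pitch that a 25-fold density gain would correspond to under this model, not a figure from the report, and real layouts also reserve area for power, ground and keep-out zones.

```python
# Connection density for a square grid of bonding pillars.
# Assumption: a regular square grid at a fixed pitch (idealised layout).

UM_PER_MM = 1000.0


def pillars_per_mm2(pitch_um: float) -> float:
    """Number of pillars that fit in one square millimetre at the given pitch."""
    per_side = UM_PER_MM / pitch_um
    return per_side * per_side


def io_gain(old_pitch_um: float, new_pitch_um: float) -> float:
    """Factor by which connection density rises when the pitch shrinks."""
    return pillars_per_mm2(new_pitch_um) / pillars_per_mm2(old_pitch_um)


print(f"{pillars_per_mm2(5.0):,.0f} pillars per mm^2 at 5 um pitch")
# Density scales with 1/pitch^2, so a 25x gain matches a 5x finer pitch.
# The 25 um starting pitch here is hypothetical.
print(f"gain from 25 um to 5 um pitch: {io_gain(25.0, 5.0):.0f}x")
```

The inverse-square relationship is the useful takeaway: modest reductions in pillar pitch translate into large increases in the number of possible chip-to-chip connections.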

Robert W. Horst of Horst Technology Consulting in San Jose, California, who was instrumental in creating the only wafer-scale product ever commercialised, at Tandem Computers, commented on the SiIF wafer-scale GPU. Horst welcomed the innovation and agreed that the new technique "overcomes the problems that the early waferscale work was not able to solve". But he warned that cooling would be a challenge with "that much logic in that close proximity". Kumar and his team are now building a wafer-scale prototype processor system, which should shed light on any such problems.

Source: IEEE Spectrum