At the ISC High Performance conference back in June, which focuses on HPC developments, Intel published a press deck (PDF) trumpeting the appeal of its Knights Landing Xeon Phi processors. Among the compelling qualities of the Xeon Phi, according to Intel, was its suitability for deep learning, and the presentation slides showed it to be a top performer at essential 'training' tasks. I have reproduced the slide I am referring to directly below.

The key claims of the slide, which its graphs try to make immediately clear, are that Xeon Phi outperforms GPUs for deep learning training in three major ways:

- Xeon Phi is 2.3x faster in training than GPUs

- Xeon Phi offers 38% better scaling than GPUs across nodes

- Xeon Phi delivers strong scaling to 128 nodes while GPUs do not

It seems that these claims have hit a sore spot with rival chipmaker Nvidia. In a blog post earlier this week, the green team was keen to point out that "newcomer" Intel was using out-of-date benchmarks. Nvidia says that deep learning is such a fast-moving field that some companies might not be able to keep up with cutting-edge developments. A case in point is that Nvidia's own architecture and software "have improved neural network training time by over 10x in a year by moving from Kepler to Maxwell to today’s latest Pascal-based systems".

Tables are turned – "Fresh vs Stale Caffe"

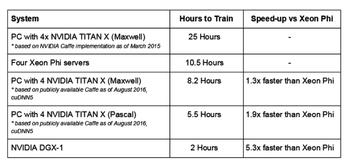

The main focus of the Nvidia blog post is to highlight that Intel used old versions (March 2015) of publicly available benchmark software. If the newest version had been used (with software from August 2016), Nvidia says the results would be overturned, with its GPUs winning across the board. The accompanying chart shows how much faster Nvidia GPU solutions are using new Caffe AlexNet data.

In another Intel comparison, its Xeon Phi is pitted against four-year-old GPUs (Nvidia Tesla K20X) paired with older interconnect technology. Last but not least, Nvidia says that Intel's scaling claims are wrong, something it says has been verified by one of its customers, Baidu.

Offering some constructive criticism for Intel, Nvidia thinks that "deep learning testing against old Kepler GPUs and outdated software versions are mistakes that are easily fixed in order to keep the industry up to date". Nvidia's blog post concludes that while it is "great that Intel is now working on deep learning," the company should endeavour to "get their facts straight".