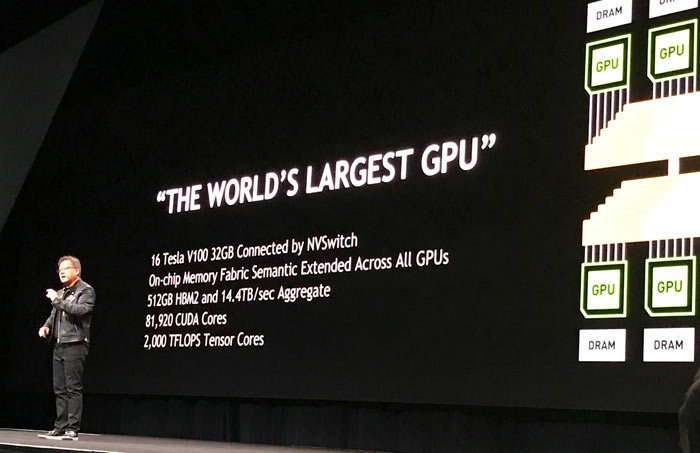

Nvidia's original DGX-1 deep learning computer system, launched in 2016, packed eight P100 GPU accelerators into a rack-mounted chassis. In May 2017 Nvidia boosted its DGX-1 by replacing the eight Pascal-based GPUs with the new Volta GV100 (16GB) models. Yesterday evening Nvidia announced its DGX-2, which packs 16x fully interconnected Tesla V100 32GB GPUs, as "the world's most powerful AI system."

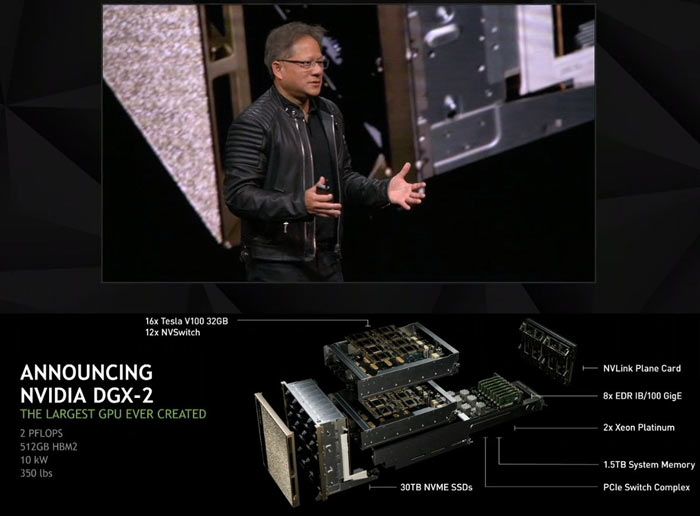

Nvidia's compact new turnkey HPC system delivers 2 petaFLOPS of compute power and is claimed to be able to train 4x bigger models on a single node with 10x the (deep learning) performance of its predecessor. (For reference, the DGX-1 was capable of 960 TFLOPS from its 40,960 CUDA cores.) The DGX-2 features up to 1.5TB of system memory, 512GB of HBM2, InfiniBand or 100GbE networking, and two Xeon Platinum processors to babysit the 16x GPUs (81,920 CUDA cores). In other important metrics, the DGX-2 consumes up to 10kW of electricity and weighs in at 350lbs (159kg).
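
As a quick sanity check, the headline totals can be broken down per GPU. The short Python sketch below uses only the figures quoted above; the variable names are illustrative, and the per-GPU throughput is a simple division of the quoted system totals, not an official per-card specification.

```python
# Figures quoted in the article (variable names are illustrative).
DGX2_GPUS = 16
DGX2_HBM2_PER_GPU_GB = 32
DGX2_CUDA_CORES = 81_920
DGX2_PFLOPS = 2.0

DGX1_GPUS = 8
DGX1_CUDA_CORES = 40_960
DGX1_TFLOPS = 960

# Total GPU memory: 16 cards x 32GB each.
total_hbm2_gb = DGX2_GPUS * DGX2_HBM2_PER_GPU_GB

# CUDA cores per GPU should match across both systems (same GV100 silicon).
dgx2_cores_per_gpu = DGX2_CUDA_CORES // DGX2_GPUS
dgx1_cores_per_gpu = DGX1_CUDA_CORES // DGX1_GPUS

# Per-GPU throughput implied by the quoted system totals.
dgx2_tflops_per_gpu = DGX2_PFLOPS * 1000 / DGX2_GPUS
dgx1_tflops_per_gpu = DGX1_TFLOPS / DGX1_GPUS

print(f"HBM2 total: {total_hbm2_gb}GB")
print(f"CUDA cores per GPU: DGX-2 {dgx2_cores_per_gpu}, DGX-1 {dgx1_cores_per_gpu}")
print(f"TFLOPS per GPU: DGX-2 {dgx2_tflops_per_gpu}, DGX-1 {dgx1_tflops_per_gpu}")
```

The per-GPU figures come out nearly identical for both systems, which suggests the raw compute gain is mostly down to the doubled GPU count, with the claimed 10x deep learning speed-up depending on other factors such as the interconnect, memory, and software.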

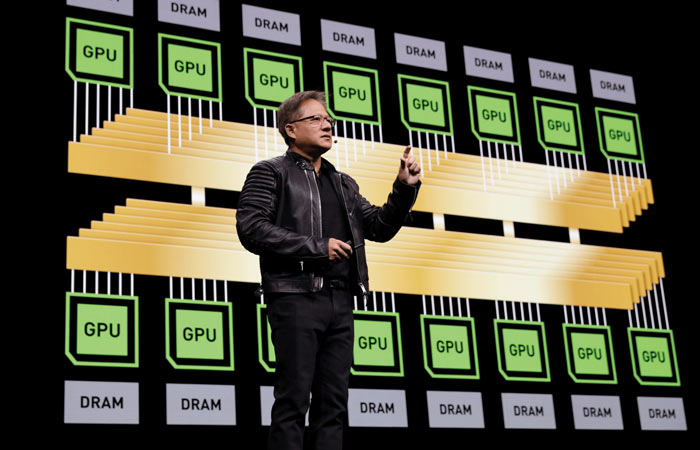

Key to the DGX-2's performance is Nvidia's flexible new GPU interconnect fabric, dubbed 'NVSwitch technology'. Thanks to this new interconnect, any of the GV100 GPUs can communicate with any other in the system at 300GB/s. The 14TB/s of aggregate system bandwidth would be sufficient to transfer 1,400 movies over this switch in one second, as Nvidia's CEO, Jensen Huang, put it. Developers can address this unified system of 81,920 CUDA cores and 512GB of HBM2 just like one 'gigantic GPU', using the same software they would with a single-GPU system.

Interested parties can already buy one of the new DGX-2 systems for $399,000. According to Nvidia, it can replace $3m worth of hardware: 300 dual-CPU servers consuming 180 kilowatts. Furthermore, the DGX-2 is 1/8th the cost, uses 1/60th of the space, and consumes 1/18th the power. "The more you buy, the more you save," quipped Huang.
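
Nvidia's cost and power comparison can be roughly reproduced from the quoted figures. The Python sketch below is only a back-of-the-envelope check with illustrative variable names; it assumes the DGX-2 runs at its 10kW maximum and takes Nvidia's $3m and 180kW cluster figures at face value.

```python
# Figures quoted in the article (variable names are illustrative).
DGX2_PRICE_USD = 399_000
DGX2_MAX_POWER_KW = 10          # assumes the DGX-2 draws its stated maximum

CLUSTER_PRICE_USD = 3_000_000   # Nvidia's figure for 300 dual-CPU servers
CLUSTER_POWER_KW = 180
CLUSTER_SERVERS = 300

# How many times more the CPU cluster costs and consumes.
cost_ratio = CLUSTER_PRICE_USD / DGX2_PRICE_USD
power_ratio = CLUSTER_POWER_KW / DGX2_MAX_POWER_KW

# Implied per-server figures for the CPU cluster.
price_per_server_usd = CLUSTER_PRICE_USD / CLUSTER_SERVERS
power_per_server_w = CLUSTER_POWER_KW * 1000 / CLUSTER_SERVERS

print(f"Cost ratio: {cost_ratio:.2f}x")
print(f"Power ratio: {power_ratio:.0f}x")
print(f"Per server: ${price_per_server_usd:,.0f}, {power_per_server_w:.0f}W")
```

The cost ratio lands at about 7.5x, which Nvidia rounds to 1/8th the cost, while the power ratio is exactly 18x. The space claim cannot be checked this way, as no rack dimensions are given.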