Datacenter race is on

AMD has renewed its focus on the high-margin datacenter market by announcing second-generation Epyc processors at its Next Horizon event in San Francisco today.

The market is worth potentially $29bn - $17bn CPU and $12bn GPU - by 2021, according to Dr Lisa Su, AMD CEO. She believes there will be strong double-digit annual growth for professional graphics cards, while CPU sales are also reckoned to increase at a faster rate than in the consumer field. The real money is in the datacenter.

Launched in June 2017, first-generation Epyc, based on the Zen architecture, tops out at 32 cores and 64 threads for the 7601 model, with two processors installable on an SP3 motherboard. The main thrust for AMD has been lower total cost of ownership compared to performance-comparable Intel solutions. Underscoring Epyc's infiltration into the datacenter market, it was announced today that Amazon Web Services (AWS) has signed up to deploy a trio of server platforms based on these Epyc processors.

Intel has hit back - ironically, yesterday - with its datacenter-centric Cascade Lake Advanced Performance platform, available early next year, housing up to 48 cores on an MCM package equipped with 12 memory channels per chip. The many-core, high-bandwidth datacenter ecosystem race is well and truly on.

Zen 2 - 7nm, wider and faster, and better Infinity Fabric

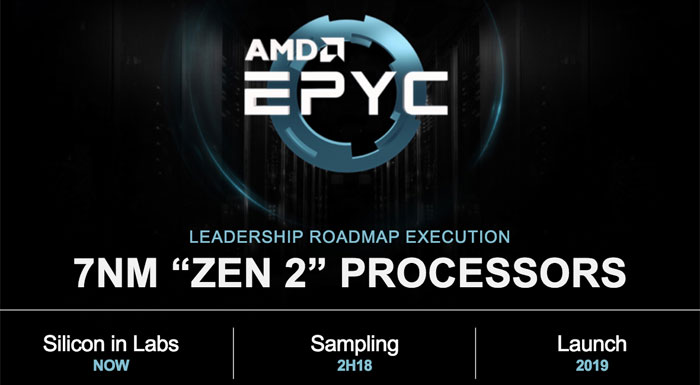

That brings us on nicely to next-generation Epyc that's based on Zen 2 and known by the codename Rome. Mark Papermaster, AMD CTO, announced a few more details regarding the architecture at today's event. The first aspect of note is that Zen 2 will be built on a 7nm process, not half-node 10nm. The reason is simple enough: to enable the best-possible performance for a given socket power level.

AMD has partnered with TSMC and a number of electronic design automation (EDA) partners to bring 7nm to the table, Papermaster said, and it will enable twice the density (smaller chips) and half the power for the same level of performance as 14nm. That enables some big, big gains to be had if harnessed correctly.

What all of this really means is that AMD can add some extra performance via improved microarchitecture - an improved execution pipeline and doubled floating-point width - and more cores and threads for second-gen Epyc, courtesy of that move to 7nm. Let's take a peek into the architectural improvements.

Looking at heavily-vectorised code first, AMD is doubling the floating-point width, to 256 bits, rectifying the obvious deficit that has existed against Intel's Xeon line of chips. Having this extra vector width becomes increasingly important in the HPC space, so its inclusion is sensible and expected, even though first-gen Epyc's general-purpose FP performance was very decent. The downside of this wider-vector approach is increased die space and power requirements of running such parallelisable code, but the move to 7nm ought to ameliorate these concerns.

Doubling of vector width capability and load/store units leads to a doubling of peak floating-point performance per core, assuming that frequencies are the same, so second-gen Epyc should blaze through well-tuned HPC code.
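To put a rough number on that per-core doubling, here is a minimal sketch of the peak-throughput arithmetic. The 128-bit and 256-bit widths follow from AMD's stated doubling to 256 bits; the two fused multiply-add (FMA) pipes per core and the 64-bit double-precision element size are our own illustrative assumptions, not figures AMD gave at the event. The ratio is the same whatever pipe count you plug in.

```python
def peak_flops_per_cycle(vector_bits: int, fma_pipes: int = 2, element_bits: int = 64) -> int:
    """Peak floating-point operations per core per clock cycle.

    fma_pipes=2 and element_bits=64 are illustrative assumptions,
    not numbers taken from AMD's announcement.
    """
    lanes = vector_bits // element_bits  # elements processed per vector instruction
    return fma_pipes * lanes * 2         # one FMA counts as two operations (multiply + add)


zen1 = peak_flops_per_cycle(128)  # first-gen Epyc: 128-bit floating-point width
zen2 = peak_flops_per_cycle(256)  # Rome: width doubled to 256 bits

print(f"Zen:   {zen1} FLOPs/cycle/core")
print(f"Zen 2: {zen2} FLOPs/cycle/core")
print(f"Ratio at equal frequency: {zen2 / zen1:.1f}x")
```

This is a best-case figure for well-vectorised code; real workloads will land below it.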

In concert with the enhanced handling of vectorisable code, AMD is improving the front-end of the chip by enhancing the branch-prediction unit and pre-fetching capability, and by increasing the micro-op cache size. These help in, as Papermaster calls it, 'feeding the beast' by reducing stalls in the pipeline. AMD has also increased the dispatch and retire bandwidth to move non-vectorisable code more quickly than first-generation Zen.

Remember we mentioned that Rome-based Epyc chips are based on a 7nm process. That much is true, as the execution units are indeed hewn from that, and AMD now refers to them as chiplets. The accompanying I/O infrastructure is on a different, 14nm die, connected via second-gen Infinity Fabric. The reason for this mixed-process design is that AMD reckons the big interface I/O analogue circuitry doesn't scale well down to 7nm, so why bother using cutting-edge, expensive silicon for it? Interesting.

And this explains why you will see next-gen Epyc chips have multiple 'CPU' die complexes surrounding a central I/O die. Keeping bandwidth high outside of the chip, Rome-based Epyc chips are the first to use PCIe 4.0, doubling the effective bandwidth to various accelerators.
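For a sense of scale on that PCIe 4.0 doubling, the sketch below works out theoretical one-direction link bandwidth. The signalling rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0) and the 128b/130b line encoding come from the PCI Express specifications rather than from AMD's presentation, and the x16 link width is simply a common accelerator configuration we have assumed. Protocol overhead is ignored, so real-world throughput will be lower.

```python
def pcie_lane_gbytes_per_s(gigatransfers_per_s: float) -> float:
    """Theoretical one-direction bandwidth of a single lane in GB/s.

    Applies the 128b/130b encoding used by PCIe 3.0 and 4.0;
    packet and protocol overhead are not modelled.
    """
    return gigatransfers_per_s * (128 / 130) / 8  # bits to bytes


GEN3_GT = 8.0   # PCIe 3.0 signalling rate per lane
GEN4_GT = 16.0  # PCIe 4.0 signalling rate per lane
LANES = 16      # assumed link width for an accelerator card

gen3_x16 = pcie_lane_gbytes_per_s(GEN3_GT) * LANES
gen4_x16 = pcie_lane_gbytes_per_s(GEN4_GT) * LANES

print(f"PCIe 3.0 x{LANES}: {gen3_x16:.2f} GB/s")
print(f"PCIe 4.0 x{LANES}: {gen4_x16:.2f} GB/s")
print(f"Ratio: {gen4_x16 / gen3_x16:.1f}x")
```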

64 cores, 128 threads, quadrupling of floating-point performance

The combination of the 7nm process and general architecture improvements, most notably the doubling of floating-point capability, turns second-gen Epyc into something of an HPC monster. Lisa Su revealed that AMD has working silicon that scales to 64 cores and 128 threads per socket, doubling what is presently available in the 32C64T Epyc 7601. What's more, with floating-point width also doubling, each Rome Epyc chip should be up to 4x faster at those calculations. Such a chip comprises eight CPU die complexes, each carrying eight cores, and that ninth die, reserved for centralised I/O. That makes sense because you ought to have identical latency when accessing each core complex.
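The 4x figure is simply two doublings multiplied together, and it only holds if clocks stay put. The sketch below makes that explicit; the core counts and vector widths are those quoted above, while the 0.8 clock ratio is a purely hypothetical value we have picked to show the sensitivity, since AMD has not disclosed Rome frequencies.

```python
def relative_socket_fp(core_ratio: float, width_ratio: float, clock_ratio: float = 1.0) -> float:
    """Peak per-socket floating-point throughput relative to a baseline chip."""
    return core_ratio * width_ratio * clock_ratio


chiplets, cores_per_chiplet = 8, 8
rome_cores = chiplets * cores_per_chiplet  # 64 cores per socket
epyc_7601_cores = 32

core_ratio = rome_cores / epyc_7601_cores  # twice the cores
width_ratio = 256 / 128                    # twice the floating-point width

same_clock = relative_socket_fp(core_ratio, width_ratio)
# Hypothetical: Rome running at 80 per cent of the 7601's clock speed.
lower_clock = relative_socket_fp(core_ratio, width_ratio, clock_ratio=0.8)

print(f"Cores per socket: {rome_cores}")
print(f"Peak FP vs Epyc 7601 at equal clocks: {same_clock:.1f}x")
print(f"Peak FP vs Epyc 7601 at 0.8x clocks (hypothetical): {lower_clock:.1f}x")
```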

There's no change in memory support, which remains at eight channels, and we wonder if that may become a hindrance in some applications given that Intel has moved over to 12 channels. Of course, AMD cannot readily move to a wider memory architecture without forgoing socket compatibility: all second-gen Epyc chips will work on present SP3-socket boards.
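One crude way to frame that concern is memory channels per core, using only the core and channel counts quoted in this article. This is a rough sketch rather than a bandwidth measurement: supported memory speeds have not been stated for either new platform, so per-channel throughput is deliberately left out.

```python
# (memory channels, cores) per socket, as quoted in this article
platforms = {
    "Epyc 7601 (first-gen)": (8, 32),
    "Rome Epyc (64-core)": (8, 64),
    "Cascade Lake AP (48-core)": (12, 48),
}

# Channels available per core; a lower figure means more cores share each channel.
channels_per_core = {
    name: channels / cores for name, (channels, cores) in platforms.items()
}

for name, ratio in channels_per_core.items():
    print(f"{name}: {ratio:.3f} channels per core")
```

On these numbers a fully populated 64-core Rome chip has half the channels per core of either the Epyc 7601 or Intel's 48-core part, which is why bandwidth-bound workloads are the ones to watch.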

Notwithstanding Intel's 48-core announcement yesterday, AMD is upping its datacenter and HPC game with second-gen Epyc processors due to be made available in the first half of next year, keeping the same socket support but doubling compute performance and, potentially, quadrupling floating-point capability.

AMD also demonstrated a single early-silicon Rome Epyc 64C128T chip beating dual Intel Xeon 8180M processors in the floating-point-intensive C-Ray test. You can't have an event without a benchmark or two, right?

There's no word on frequency or price at this moment - one would expect speeds to decrease with increased core density - yet the sheer compute and FP performance of next-gen AMD server processors appears, if you'll excuse the pun, Epyc.