At a special Data-Centric Innovation Day in Santa Clara, California, Intel has launched a wide range of products that it says reflect its new data-centric strategy. These products help users process, move and store increasingly vast amounts of data, and support high-growth workloads in areas such as the cloud, AI and 5G.

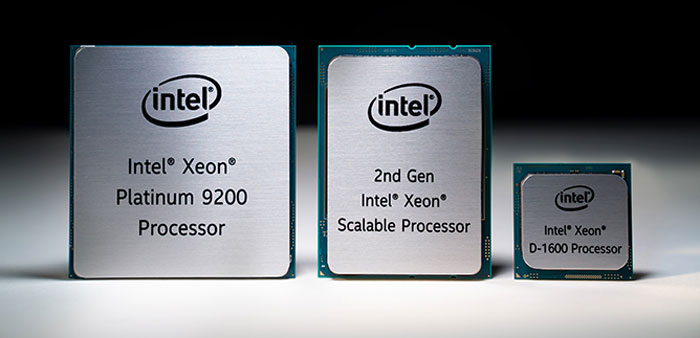

Intel's name was built on its computer processors, and despite all the talk of moving from PC-centric to data-centric strategies, the biggest announcement of the night was a new clutch of CPUs, albeit aimed at corporate and enterprise servers. In total, over 50 new processors were announced, including the headlining 2nd-Generation Intel Xeon Scalable CPUs, previously codenamed Cascade Lake. Additionally, network-optimised Xeon processors, new Agilex FPGAs and Xeon D SoCs were added to Intel's processor throng.

Of the 50 new 2nd-Generation Intel Xeon Scalable processors that have become available today, the flagship is undoubtedly the 56-core, 12-memory-channel Intel Xeon Platinum 9200. Intel says that this processor is "designed to deliver leadership socket-level performance and unprecedented DDR memory bandwidth in a wide variety of high-performance computing (HPC) workloads, AI applications and high-density infrastructure". Compared to the previous generation, these new Xeon Scalable chips are said to offer an average 1.33x performance gain.

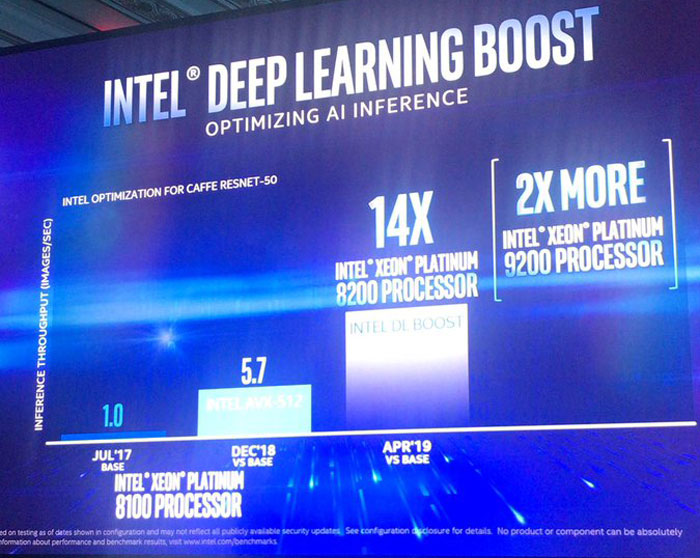

An interesting new feature in the 2nd-Generation Intel Xeon Scalable CPUs is the integration of Intel DL Boost (Intel Deep Learning Boost) technology. Intel has designed this processor extension to accelerate AI inference workloads such as image recognition, object detection and image segmentation within data centre, enterprise and intelligent-edge computing environments.
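For readers who want to check whether a given Linux server exposes this extension, the following is a minimal sketch. It relies on details that are not part of Intel's announcement as reported here: that DL Boost on these CPUs is delivered through the AVX-512 VNNI instructions, and that the Linux kernel reports this as the `avx512_vnni` flag in `/proc/cpuinfo`. The helper names `has_cpu_flag` and `read_cpuinfo` are hypothetical, chosen for illustration.

```python
def has_cpu_flag(cpuinfo_text: str, flag: str = "avx512_vnni") -> bool:
    """Return True if any 'flags' line in /proc/cpuinfo-style text lists `flag`."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        # Match whole tokens so that e.g. 'avx512f' does not match 'avx512_vnni'.
        if key.strip() == "flags" and flag in value.split():
            return True
    return False


def read_cpuinfo(path: str = "/proc/cpuinfo") -> str:
    """Read the cpuinfo file; return an empty string if it cannot be read."""
    try:
        with open(path, encoding="utf-8") as handle:
            return handle.read()
    except OSError:
        # Assumption: on non-Linux systems the file is simply absent.
        return ""


if __name__ == "__main__":
    print("AVX-512 VNNI flag present:", has_cpu_flag(read_cpuinfo()))
```

A positive result only indicates that the instructions are available; whether a workload benefits depends on the framework and model actually using them.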

Backing up its inference processing credentials, Intel says it has worked closely with partners and application makers to take full advantage of Intel DL Boost technology. Intel name-checked frameworks such as TensorFlow, PyTorch, Caffe, MXNet and Paddle Paddle.

Examples of the practical value of Intel DL Boost include the following: Microsoft has seen a 3.4x boost in image recognition performance, Target has witnessed a 4.4x boost in machine learning inference, and JD.com has seen a 2.4x boost in text recognition.

Another feather in the cap of the 2nd-Generation Intel Xeon Scalable processors is that they support Intel's Optane DC persistent memory, "which brings affordable high-capacity and persistence to Intel’s data-centric computing portfolio." With Intel Optane DC, 36TB system-level memory capacity is possible in an 8-socket system (3x more memory than the previous gen of Xeon Scalable processors).*
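The capacity figures above imply some simple arithmetic, sketched below. It assumes that "3x more memory" means three times as much as the previous generation (rather than three times more on top), and that capacity is spread evenly across sockets; the function names are illustrative only.

```python
def per_socket_capacity_tb(system_tb: float, sockets: int) -> float:
    """Capacity per socket, assuming an even split across all sockets."""
    return system_tb / sockets


def implied_previous_capacity_tb(system_tb: float, gain: float) -> float:
    """Previous-generation system capacity implied by a 'gain'x improvement."""
    return system_tb / gain


if __name__ == "__main__":
    system_tb = 36   # quoted system-level capacity for an 8-socket system
    sockets = 8
    gain = 3         # assumption: "3x more" read as "3 times as much"

    print("Per socket (TB):", per_socket_capacity_tb(system_tb, sockets))
    print("Implied previous-gen 8-socket system (TB):",
          implied_previous_capacity_tb(system_tb, gain))
```

Under these assumptions the quoted figure works out to 4.5TB per socket, against an implied 12TB for a previous-generation 8-socket system.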

Other key attractions of the 2nd gen Intel Xeon Scalable processors are as follows:

- Intel Turbo Boost Technology 2.0 ramps up to 4.4GHz, alongside memory subsystem enhancements with support for DDR4-2933MT/s and 16Gb DIMM densities.

- Intel Speed Select Technology provides enterprise and infrastructure-as-a-service providers more flexibility to address evolving workload needs.

- Enhanced Intel Infrastructure Management Technologies to enable increased utilization and workload optimization across data centre resources.

- New side-channel protections are directly incorporated into hardware.

Among the other chips announced tonight were the Agilex FPGAs, new 10nm Intel FPGAs that are built to deliver flexible hardware acceleration and application-specific optimisation for edge computing, networking and data centres. Agilex FPGAs will become available from H2 2019. Last but not least, Intel unveiled the Xeon D-1600 processor, described as a highly integrated SoC designed for dense environments where power and space are limited, but per-core performance is essential.

*Above is an overview of the processors Intel has announced, just one part of today's three-pronged series of announcements covering the moving, storing and processing of data. Coverage of the moving and storing products and innovations will be published in a follow-up article.