SoftBank-owned Arm continues to dominate the market for the architecture behind low-power, efficient CPUs powering the vast majority of smartphones on the market today. Though Arm doesn't build any products of its own, leaving this up to its wide range of partners, its Cortex line is omnipresent in silicon designs from all the leading players.

Last year Arm upped the performance ante by releasing the Cortex-A76 CPU, offering up to 35 per cent more performance over the previous generation, plus specific improvements for machine learning. Cortex-A76, then, did much to boost single-thread performance over its predecessor.

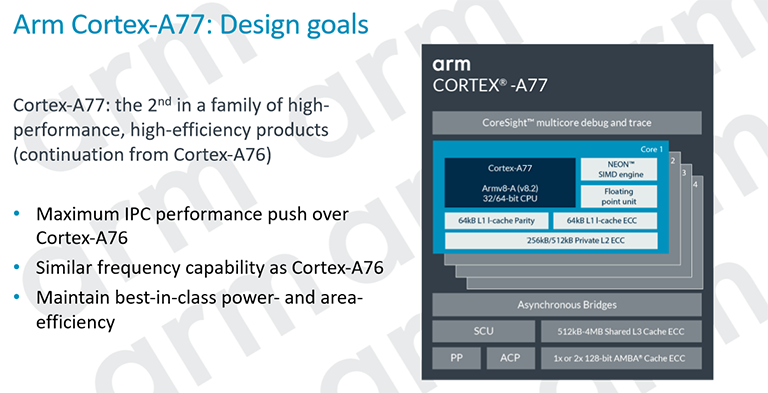

It's against that backdrop that, a year on, Arm is launching the new-and-improved Cortex-A77 processor. Ostensibly the same as its predecessor from a high-level perspective, the design has been tweaked significantly by Arm's engineers to increase its instructions-per-clock (IPC) capability.

The aim, therefore, has been to increase performance, via IPC improvements, without driving up the key limiter: power. So how has Arm been able to claim handsome single-thread improvements without a massive overhaul of the design? Read on.

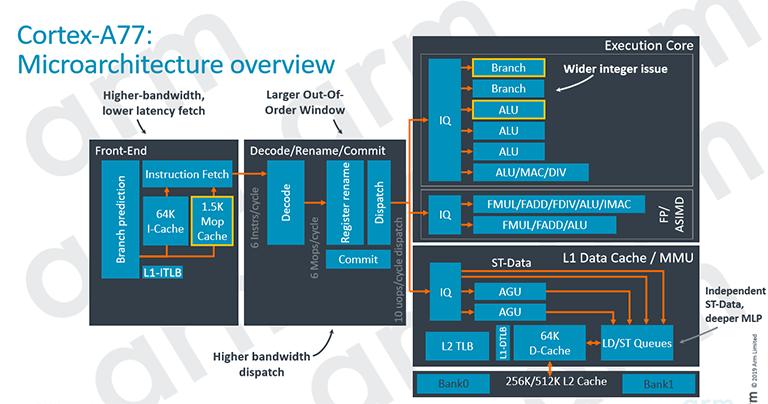

This block diagram highlights the similarities and architectural improvements over Cortex-A76. Let's take each in turn.

Spending time and resource on the front-end improves the processor's ability to streamline the flow of instructions going into the fetch, decode and dispatch areas, enabling the processor to feed the execution beast, so to speak.

A great place to start is the branch-prediction unit (BPU), which, as the name suggests, tries to guess where a branch will go. The reason for predicting branches is to keep the processor as busy as possible: rather than stalling until a branch is resolved, the core speculatively fetches and executes instructions down the predicted path, so it's usual for architects to spend a lot of time and effort here. The trade-off of beefing up the BPU is increased power and silicon area, so it's a fine balancing act for a TDP-limited architecture such as Cortex-A77.

Predicting branches and using the MOP

However, because this new Cortex has a more powerful execution core - more on that in a bit - the BPU needs an upgrade, too. As such, Arm doubles the branch-prediction bandwidth, to 64 bytes per cycle, and therefore smooths out unwanted bubbles - cycles where nothing happens - in the core engine. There's also some secret sauce that reduces branch mispredicts. Remember, getting it wrong at this first stage means the entire pipeline needs to be flushed, which is costly from a power/performance point of view. In concert, Cortex-A77 has a larger branch target buffer (makes implicit sense), and a much larger L1 BTB.
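To make the cost of a mispredict a little more concrete, here is a minimal Python sketch of the textbook two-bit saturating-counter predictor. Arm hasn't disclosed how the Cortex-A77 BPU actually works, so this is emphatically not its design; the class name, table size and loop trace are all hypothetical and chosen purely for illustration.

```python
class TwoBitPredictor:
    """Textbook 2-bit saturating-counter predictor, indexed by branch address.

    Illustrative only: not a model of the Cortex-A77 BPU.
    """

    def __init__(self, table_size=1024):
        self.table_size = table_size
        # Counter states 0-1 predict not-taken, 2-3 predict taken.
        # Every entry starts at 1 (weakly not-taken).
        self.counters = [1] * table_size

    def predict(self, pc):
        return self.counters[pc % self.table_size] >= 2

    def update(self, pc, taken):
        i = pc % self.table_size
        if taken:
            self.counters[i] = min(3, self.counters[i] + 1)
        else:
            self.counters[i] = max(0, self.counters[i] - 1)


def mispredict_rate(predictor, trace):
    """trace is a list of (pc, taken) pairs; returns the fraction mispredicted."""
    misses = 0
    for pc, taken in trace:
        if predictor.predict(pc) != taken:
            misses += 1
        predictor.update(pc, taken)
    return misses / len(trace)


# Hypothetical loop branch at address 0x40: taken nine times, then falls through.
loop_trace = [(0x40, i % 10 != 9) for i in range(1000)]
rate = mispredict_rate(TwoBitPredictor(), loop_trace)
```

On this trace the predictor gets the loop exit wrong every time round, so roughly one branch in ten is mispredicted. Each of those would cost a real core a pipeline flush, which is why shaving the mispredict rate matters so much.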

The interesting part of the front-end is the presence of the micro-op cache (shown as MOP), also present on the latest x86 processors from Intel and AMD. The MOP's objective is simple: to cache instructions as they're decoded. The benefit of this cache is that it enables the fetch part of the design, shown just above, to pull already-decoded instructions from the cache and save power by not having to decode them from scratch. It also cuts a step off the pipeline, to 10 cycles, so it's a win-win all round.

Of course, pointing out the obvious, the micro-op cache works on instructions 'second time around' (because it's a stored cache), meaning that performance benefits won't be obvious on first-run instructions. Arm reckons the MOP cache, which is included in the L1 i-cache and has a 1.5K size (about 6K for regular instruction cache), has a hit rate of around 85 per cent across a wide range of workloads. A MOP cache makes huge sense for the power-constrained environments that Arm typically designs for.
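The 'second time around' behaviour is easy to see in a toy model. The sketch below treats the micro-op cache as a small least-recently-used map from instruction address to decoded micro-ops. That is a deliberate simplification and an assumption on our part: real micro-op caches are organised quite differently, and `MicroOpCache`, `toy_decode`, the capacity and the loop addresses are all made up for the example.

```python
from collections import OrderedDict


class MicroOpCache:
    """Toy LRU cache mapping an instruction address to its decoded micro-ops.

    Illustrative only: not how the Cortex-A77 MOP cache is organised.
    """

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()
        self.hits = 0
        self.misses = 0

    def fetch(self, pc, decode):
        if pc in self.entries:
            # Hit: reuse the stored micro-ops and skip the decoder.
            self.hits += 1
            self.entries.move_to_end(pc)
            return self.entries[pc]
        # Miss: pay for a full decode, then store the result for next time.
        self.misses += 1
        mops = decode(pc)
        self.entries[pc] = mops
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used
        return mops

    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def toy_decode(pc):
    # Stand-in for the real decoder; returns a dummy micro-op.
    return ("mop", pc)


cache = MicroOpCache(capacity=64)
loop_body = range(0x1000, 0x1000 + 16 * 4, 4)  # 16 hypothetical instructions
for _ in range(10):
    for pc in loop_body:
        cache.fetch(pc, toy_decode)
```

The first pass through the loop misses on all 16 instructions, and the remaining nine passes hit every time, giving a 90 per cent hit rate for this contrived case. Code that never repeats would see no benefit at all.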

One question you might be asking yourself is why Arm didn't introduce a dedicated MOP cache in earlier architectures if it's effective at increasing performance whilst also potentially reducing power? The answer is two-fold: it's not a trivial undertaking and, secondly, the processor performance has to be strong enough to warrant such a cache. Cortex-A77 is the first core that Arm deems strong enough for the benefit to more than justify the increase in overall silicon footprint.

Wide and smart

Moving along to decode, architecture aficionados will recognise that Arm boosts the dispatch bandwidth by 50 per cent, from four instructions per cycle through to six. That width is preserved through to register rename/dispatch and total dispatch to the execution core rises from eight on Cortex-A76 to 10 here. One needs a larger out-of-order window to accommodate such changes, so it jumps from 128 entries to 160, again feeding the execution beast.

This front-end and mid-part width is no good if you can't use it. Cortex-A77 brings with it a second branch unit and a third arithmetic logic unit (ALU) in order to run more instructions in parallel. On the top end of the execution core one is looking at a 50 per cent increase in processing - Cortex-A76 has a single branch unit, dual ALUs, and a multi-purpose ALU, therefore missing out on the two extra execution opportunities available here. Though not explicitly shown, Cortex-A77 has a second AES pipe, too. It's also worth pointing out that Cortex-A77 uses a unified instruction queue for increased efficiency.

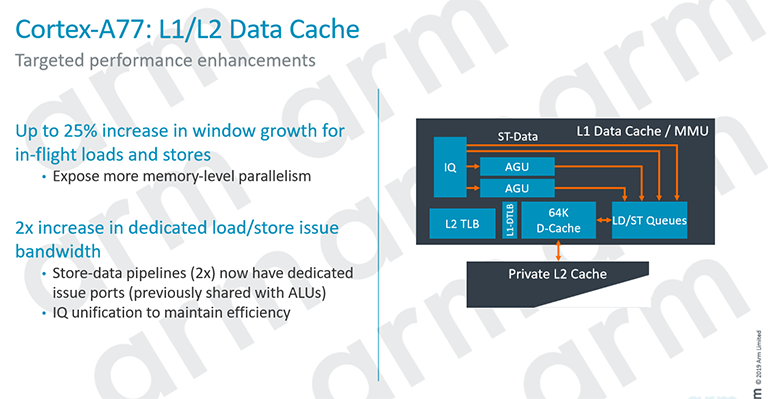

Another interesting departure is how the memory-management unit functions, and it's an area where Arm makes meaningful gains in performance. First off, compared to its predecessor, Cortex-A77 gives the store-data pipelines direct access to the issue queue - it's represented in the block diagram by the two topmost orange lines in the MMU. What this really means is extra bandwidth, which is always a good thing.

The load-store queue, as the name intimates, is responsible for loading and storing data to and from memory, so from a design point of view, anything that can be done to make this process more efficient pays handsome dividends. Arm uses what it calls a 'next-generation data prefetcher' that offers a multitude of benefits, including enabling higher bandwidth from main memory, reducing the need to go that far, and increasing power efficiency as a result.
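Arm hasn't detailed how its new prefetcher works, but the general idea of prefetching can be sketched with the simplest textbook scheme: a stride prefetcher that spots a constant gap between successive load addresses and fetches the next one ahead of time. Everything below, including the `StridePrefetcher` class, the `coverage` helper and the addresses, is a hypothetical illustration rather than a description of Cortex-A77.

```python
class StridePrefetcher:
    """Toy stride prefetcher: after two equal address deltas, predict the next address.

    Illustrative only: not Arm's 'next-generation data prefetcher'.
    """

    def __init__(self):
        self.last_addr = None
        self.last_stride = None

    def access(self, addr):
        """Record a load address; return an address to prefetch, or None."""
        prediction = None
        if self.last_addr is not None:
            stride = addr - self.last_addr
            # Only predict once the same non-zero stride has been seen twice in a row.
            if stride != 0 and stride == self.last_stride:
                prediction = addr + stride
            self.last_stride = stride
        self.last_addr = addr
        return prediction


def coverage(addresses):
    """Fraction of accesses whose address had already been prefetched."""
    prefetcher = StridePrefetcher()
    prefetched = set()
    covered = 0
    for addr in addresses:
        if addr in prefetched:
            covered += 1
        target = prefetcher.access(addr)
        if target is not None:
            prefetched.add(target)
    return covered / len(addresses)


# Hypothetical workload: walking an array one 64-byte line at a time.
sequential = [0x8000 + 64 * i for i in range(100)]
sequential_coverage = coverage(sequential)
```

For the sequential walk, all but the first three accesses are covered by a prefetch, so the data is already on its way by the time the load asks for it. An access pattern with no regular stride gets no help from a scheme this simple, which is where cleverer prefetchers earn their keep.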

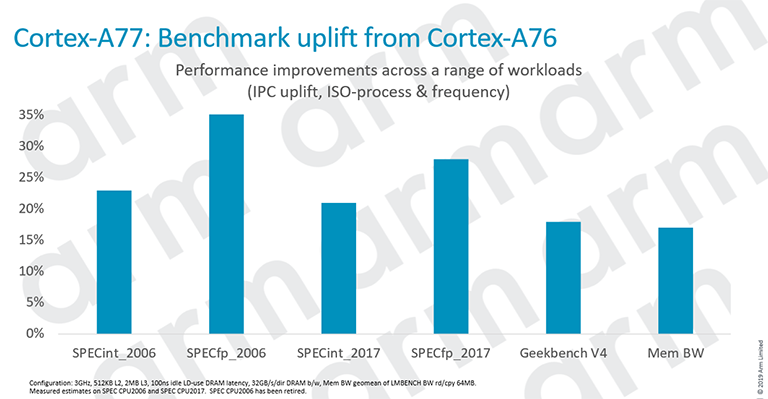

The sum of all of these architectural changes - a smarter front-end, wider dispatch and execution, plus smarter data prefetching - adds up to an average 20 per cent improvement when compared to Cortex-A76 on a level playing field.
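As a back-of-the-envelope way to read that figure, and assuming 'a level playing field' means the same clock frequency and process, single-thread performance can be treated as roughly proportional to IPC multiplied by frequency. The symbols $P$ (performance) and $f$ (clock frequency) are our own shorthand, and this is a simplification rather than Arm's methodology:

$$
\frac{P_{\text{A77}}}{P_{\text{A76}}} \approx \frac{\text{IPC}_{\text{A77}} \times f}{\text{IPC}_{\text{A76}} \times f} = \frac{\text{IPC}_{\text{A77}}}{\text{IPC}_{\text{A76}}} \approx 1.20
$$

With frequency cancelled out, the whole of the claimed gain has to come from IPC.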

One would expect a decent gain in integer-heavy benchmarking, but what's a tad surprising is the gain in floating-point performance, mostly down to dealing with memory far more cleverly. Sure, one can't take SPEC benchmarks as gospel - certain parts of the benchmarks run exceedingly well on Cortex-A77, perhaps too well - but the takeaway is that this processor ought to offer an easy transition for the SoC designer and an extra dollop of performance in all scenarios for any smartphone equipped with it.

Wrap

It will be up to the smartphone manufacturer to decide how many cores to lay down, at what speed, and so forth, though keeping an apples-to-apples comparison means next year's Cortex-toting hardware ought to remain competitive.

Arm made very healthy strides in performance when moving from Cortex-A75 to Cortex-A76. The newest iteration builds upon the foundations of the Cortex-A76 and enhances performance by making it a wider design... all without increasing the power or silicon footprint by a significant margin. The two standout features - MOP cache and a brand-new prefetcher - do the most to boost all-important IPC.

Arm has come out swinging with the new Cortex-A77 CPU core that'll inevitably replace the present Cortex-A76 as the go-to choice for a number of premium SoC designers. It is better than its predecessor in every respect and good enough, or so say the benchmarks, to be a true laptop-class processor; we wait with bated breath to see if Arm can finally crack the x86 stranglehold in that space.