Gigabyte's Aorus X570 overclocking guide PDF turns out to be an interesting source of pre-launch AMD Ryzen 9 3950X testing data. The document looks at the overclocking options open to users of the Gigabyte X570 Aorus Master motherboard with an AMD Ryzen 9 3950X processor installed, 16GB of DDR4-3200 RAM, and a liquid cooler with a 360mm radiator.

In the document Gigabyte notes that the 3950X has a Max Boost frequency of 4.7GHz - but that figure applies to just two cores. It then walks users through pushing their mobo/CPU combo to achieve the highest stable speeds with all 16 cores processing. There are the usual warnings about warranties and overdoing voltage tweaking.

After making various clock, voltage, and memory adjustments in the dedicated 'Tweaker Tab' of the Aorus BIOS, users are encouraged to run stability checks and stress tests. Depending on whether the system passes, you might want to revise the tweaks to be gentler or fiercer.

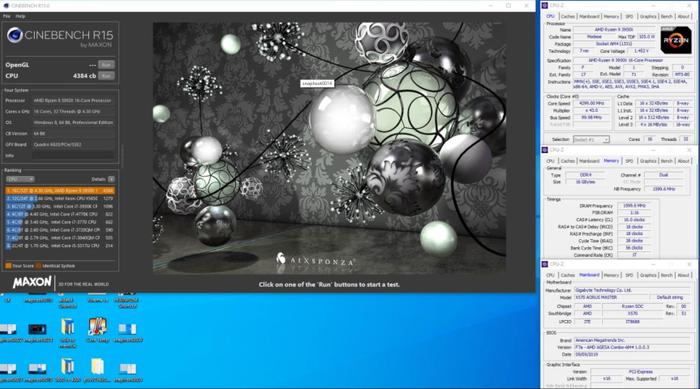

In Gigabyte's own tests it was found that "the AMD Ryzen 9 3950X can hit around 4.3GHz using around 1.4V Vcore". If you go to the end of the PDF, you will read that this is the limit for many of the samples Gigabyte tested, but it did get one sample stable at 4.4GHz and shared a Cinebench R15 run at that speed (score 4475cb).

On the topic of Cinebench benchmarking, the processor overclocked to 4.3GHz scored 4,384 points in Cinebench R15, which is 452 points - better than 10 per cent - ahead of stock. An Intel-based rival such as the Core i9-9980XE achieves around 3,700 points in the same benchmark.
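The percentages behind those scores are easy to check. Below is a minimal Python sketch of the arithmetic, using only the figures quoted above. The stock score is not stated directly, so it is inferred here as the 4.3GHz score minus the quoted 452 point gain. The variable names and the `uplift_pct` helper are illustrative, and the Core i9-9980XE figure is only the approximate one given in the text.

```python
# Cinebench R15 multi-core scores quoted in the article.
oc_4_3ghz = 4384       # all-core overclock at 4.3GHz
oc_4_4ghz = 4475       # Gigabyte's best sample at 4.4GHz
gain_vs_stock = 452    # quoted gain of the 4.3GHz overclock over stock
intel_9980xe = 3700    # approximate Core i9-9980XE score ("around 3,700")

# Assumption: stock score inferred from the quoted difference.
stock = oc_4_3ghz - gain_vs_stock


def uplift_pct(score, baseline):
    """Percentage by which score exceeds baseline."""
    return (score - baseline) / baseline * 100


print(f"Implied stock score: {stock}")
print(f"4.3GHz OC vs stock:  {uplift_pct(oc_4_3ghz, stock):.1f}%")
print(f"4.4GHz OC vs stock:  {uplift_pct(oc_4_4ghz, stock):.1f}%")
print(f"4.3GHz OC vs 9980XE: {uplift_pct(oc_4_3ghz, intel_9980xe):.1f}%")
```

On these numbers the implied stock score is 3,932 points, the 4.3GHz overclock is worth roughly 11.5 per cent, and the single 4.4GHz sample roughly 13.8 per cent. The gap to the Intel chip depends on an approximate score, so treat that last figure as a rough guide.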


Remember, the AMD Ryzen 9 3950X was due to arrive in September but has been delayed to November. It will likely launch alongside the latest third-gen Threadripper CPUs next month.