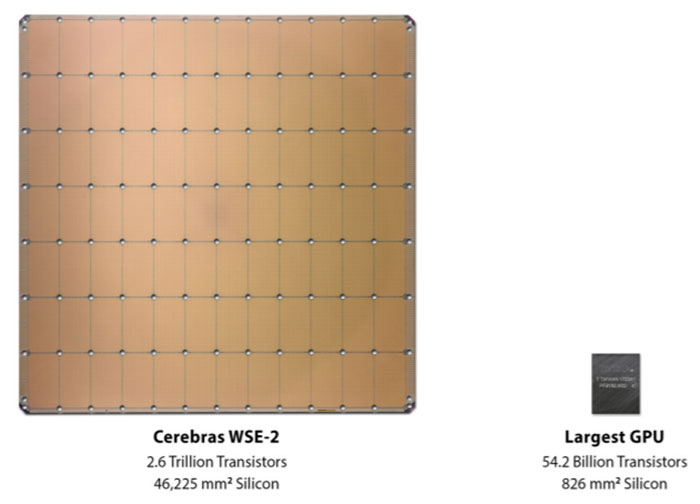

Cerebras astonished tech watchers back in August 2019 when it unveiled an AI processor dubbed the Wafer Scale Engine (WSE). Most chips are cut from small portions of a 12-inch silicon wafer. The WSE is instead a single chip made from an entire wafer. As a result, the WSE packed 1.2 trillion transistors and 400,000 AI computing cores into an area of 21.5 x 21.5cm (8.5 x 8.5 inches). Fabricated by TSMC on its 16nm process, the WSE also featured 18GB of superfast on-chip SRAM with 9 petabytes per second of memory bandwidth. Compare that to Nvidia's largest GPU, the A100, with 'just' 54 billion transistors and 7,344 cores.

| | WSE | WSE-2 | Nvidia A100 |
|---|---|---|---|
| Area | 46,225mm² | 46,225mm² | 826mm² |
| Transistors | 1.2 trillion | 2.6 trillion | 54.2 billion |
| Cores | 400,000 | 850,000 | 6,912 + 432 |
| On-chip memory | 18GB | 40GB | 40MB |
| Memory bandwidth | 9PB/s | 20PB/s | 1,555GB/s |
| Fabric bandwidth | 100Pb/s | 220Pb/s | 600GB/s |
| Fabrication process | 16nm | 7nm | 7nm |

At Hot Chips in August 2020, Cerebras began teasing a second-gen WSE, to be fabricated by TSMC on its 7nm process. The chip designers said the revamped full-wafer chip would pack 2.6 trillion transistors and 850,000 AI-optimised cores. Now the 7nm-based WSE-2 has been fully unveiled.

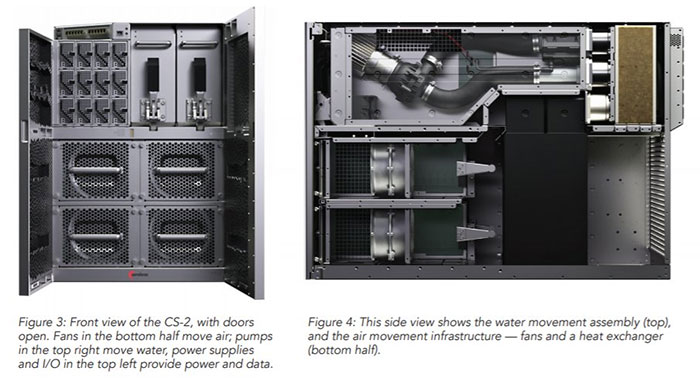

The WSE-2 will be the power behind the Cerebras CS-2, "the industry’s fastest AI computer, designed and optimized for 7nm and beyond". According to Cerebras the new AI computer will be more than twice as fast as the first-gen CS-1. "In AI compute, big chips are king, as they process information more quickly, producing answers in less time – and time is the enemy of progress in AI," explained Dhiraj Mallik, Vice President Hardware Engineering, Cerebras Systems. "The WSE-2 solves this major challenge as the industry’s fastest and largest AI processor ever made."

Cerebras claims that, depending on the workload, the CS-2 "delivers hundreds or thousands of times more performance than legacy alternatives". Moreover, it is said to do so while consuming a fraction of the power in a relatively small system form factor (26 inches tall). A single CS-2, says Cerebras, "replaces clusters of hundreds or thousands of graphics processing units (GPUs) that consume dozens of racks, use hundreds of kilowatts of power, and take months to configure and program."

The CS-1 was previously deployed at Argonne National Laboratory and by pharmaceutical firm GSK. Representatives from both organisations are looking forward to deliveries of CS-2 systems, as the CS-1 surpassed expectations in accelerating complex training, simulation, and prediction models.