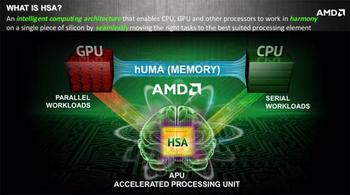

This morning AMD released information detailing its upcoming heterogeneous Uniform Memory Access (hUMA) technology. This new APU and system memory tech is expected to bring both coding and execution efficiencies to AMD processors from Kaveri onwards. In a hUMA system the CPU, GPU and any other system processors can work harmoniously with a single pool of memory, and tasks will be automatically allocated to the most suitable and efficient processor. However, software has to be specially written to make use of these enhancements.

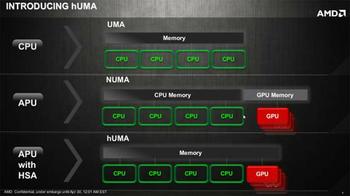

hUMA will allow the CPU and GPU components on an AMD APU to access the same system memory directly. This will eliminate the need to copy data to the GPU, a step that introduces latency into the system and can in turn negate many of the GPU’s parallel processing benefits. The new system is best illustrated by the charts supplied in AMD’s presentation.

The graphical representation of the hUMA system above clearly shows that all the processors in an AMD APU with HSA (Heterogeneous System Architecture) can access the same memory.

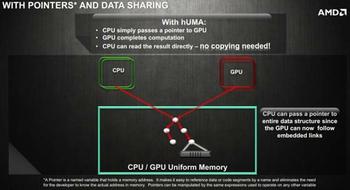

AMD details three key features of hUMA. Bi-Directional Coherent Memory allows all the processors to work together with one set of data. Pageable Memory allows the GPU to handle page faults just like the CPU does. Entire Memory Space means that both the CPU and GPU will have access to the system RAM and the entire virtual memory address space. The slide above shows how pointers and data sharing can work in a hUMA system.
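To make the copy-elimination point concrete, here is a minimal sketch in plain Python. It is not AMD's API and involves no real GPU: the function names (`gpu_double`, `run_with_copies`, `run_shared`) are hypothetical, and the "GPU kernel" is just an ordinary function running on the CPU. It only contrasts the two data-flow patterns, staging data into a separate device buffer versus handing the kernel a reference to the same memory.

```python
# Toy CPU-only model. The "gpu_*" function is ordinary Python standing in
# for a GPU kernel; all names here are illustrative assumptions.

def gpu_double(buf):
    """Stand-in for a GPU kernel: doubles every element in place."""
    for i in range(len(buf)):
        buf[i] *= 2


def run_with_copies(host_data):
    """Separate-memory model: data is staged to the device and back."""
    elements_copied = 0
    device_data = list(host_data)        # copy host -> device
    elements_copied += len(device_data)
    gpu_double(device_data)              # kernel works on the device copy
    host_data[:] = device_data           # copy device -> host
    elements_copied += len(device_data)
    return elements_copied


def run_shared(host_data):
    """hUMA-style model: the kernel receives a reference to the same memory."""
    gpu_double(host_data)                # no staging copy in either direction
    return 0


a = [1, 2, 3, 4]
b = [1, 2, 3, 4]
copied_a = run_with_copies(a)
copied_b = run_shared(b)
print(a, copied_a)  # [2, 4, 6, 8] 8
print(b, copied_b)  # [2, 4, 6, 8] 0
```

Both paths produce the same result, but the first moves every element twice. On real hardware those transfers are the latency cost described earlier, which a shared address space is meant to remove.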

As mentioned in the introduction, the first systems with hUMA are expected to arrive with Kaveri processors, in the latter half of this year. Ars Technica points out that hUMA needs developers to write code specifically to take advantage of its enhancements. With games consoles like the PS4 and the next Xbox expected to have AMD hUMA processors, this could have a positive impact on the development of PC software that takes advantage of AMD’s new memory architecture.