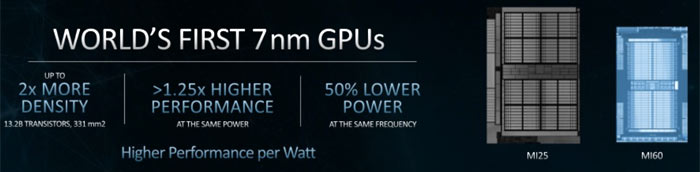

AMD held its Next Horizon event in San Francisco yesterday evening and you might already have digested the news concerning the 7nm Zen 2 based Epyc processors, written up by the HEXUS Editor. However, there were other interesting announcements by AMD at Next Horizon; it said that its Epyc processors had become available on Amazon Web Services, and it revealed the world’s first 7nm data centre GPUs, based on the Vega architecture. We provide more info on those GPUs, the new AMD Radeon Instinct MI60 and MI50 accelerators, below.

“The fusion of human instinct and machine intelligence is here.”

AMD sums up its new 7nm GPU accelerator cards as follows: “AMD Radeon Instinct MI60 and MI50 accelerators with supercharged compute performance, high-speed connectivity, fast memory bandwidth and updated ROCm open software platform power the most demanding deep learning, HPC, cloud and rendering applications”.

Designed for the data centre and running on Linux only, these GPUs provide the compute performance required for next-generation deep learning, HPC, cloud computing and rendering applications. AMD thinks the flexible mixed-precision FP16, FP32 and INT4/INT8 capabilities of these GPUs will prove attractive for deep learning. A particular claim to fame of the beefier AMD Radeon Instinct MI60 is that it is the world’s fastest double precision PCIe 4.0 capable accelerator, delivering up to 7.4 TFLOPS peak FP64 performance (the MI50 can achieve up to 6.7 TFLOPS peak FP64 performance). This performance makes the new GPU accelerators an efficient, cost-effective solution for a variety of deep learning workloads. Furthermore, AMD has enabled high reuse in Virtual Desktop Infrastructure (VDI), Desktop-as-a-Service (DaaS) and cloud environments.

If you wish to use virtualisation technology to share these GPU resources, AMD is keen to point out that its MxGPU Technology, a hardware-based GPU virtualisation solution, hardens such systems against hackers, delivering an extra level of security for virtualised cloud deployments.

Fast data transfer is essential in the target applications of these products, and AMD states that the two Infinity Fabric Links per GPU deliver “up to 200GB/s of peer-to-peer bandwidth – up to 6X faster than PCIe 3.0 alone”. Up to four GPUs can be configured into a hive ring (two hives in eight-GPU servers), adds AMD.

An Infinity Fabric ring with four Radeon Instinct MI50 cards

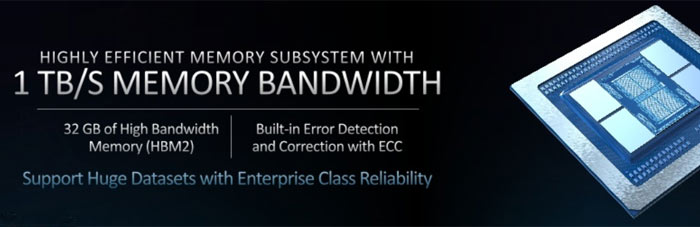
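
To put AMD's bandwidth claim and the ring layout into perspective, here is a rough back-of-the-envelope sketch in Python. It is not AMD's own calculation: the PCIe 3.0 x16 figure of about 15.75GB/s per direction is our assumption, as is the idea that the two links contribute equally to the quoted 200GB/s, and the helper names (`ring_neighbours`, `ring_links`) are purely illustrative.

```python
# Figures quoted by AMD in the article.
INFINITY_FABRIC_TOTAL_GBS = 200.0   # peak peer-to-peer bandwidth per GPU
LINKS_PER_GPU = 2                   # Infinity Fabric Links per GPU
HIVE_SIZE = 4                       # GPUs per hive ring

# Assumption (not from the article): PCIe 3.0 x16 moves roughly
# 15.75 GB/s in each direction, so about 31.5 GB/s bidirectional.
PCIE3_X16_PER_DIRECTION_GBS = 15.75


def ring_neighbours(gpu, ring_size):
    """Return the two GPUs that `gpu` is wired to in a ring."""
    return ((gpu - 1) % ring_size, (gpu + 1) % ring_size)


def ring_links(ring_size):
    """Return the set of point-to-point links that make up the ring."""
    return {frozenset((g, (g + 1) % ring_size)) for g in range(ring_size)}


# Assumption: both links contribute equally to the quoted total.
per_link_gbs = INFINITY_FABRIC_TOTAL_GBS / LINKS_PER_GPU

pcie3_bidirectional_gbs = 2 * PCIE3_X16_PER_DIRECTION_GBS
speedup = INFINITY_FABRIC_TOTAL_GBS / pcie3_bidirectional_gbs

links = ring_links(HIVE_SIZE)

print(f"Per-link share of the quoted peak: {per_link_gbs:.0f} GB/s")
print(f"Ratio to assumed PCIe 3.0 x16: {speedup:.1f}x")
print(f"Links in a {HIVE_SIZE}-GPU ring: {len(links)}")
for gpu in range(HIVE_SIZE):
    print(f"GPU {gpu} connects to GPUs {ring_neighbours(gpu, HIVE_SIZE)}")
```

Under those assumptions the ratio lands a little above six, which is in line with AMD's “up to 6X” wording. The ring also shows why four cards is a natural hive size for a two-link GPU: every card uses exactly its two links to reach its immediate neighbours.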

The AMD Radeon Instinct MI60 and MI50 accelerators come packing 32GB and 16GB of HBM2 ECC memory, respectively. AMD says that both GPU cards provide full-chip ECC and Reliability, Accessibility and Serviceability (RAS) technologies, which are important to HPC deployments.

In terms of supporting software, AMD has updated its ROCm open software platform to ROCm 2.0. This new release supports 64-bit Linux operating systems including CentOS, RHEL and Ubuntu, includes updated libraries, and adds support for the latest deep learning frameworks such as TensorFlow 1.11, PyTorch (Caffe2), and others.

The MI60 will begin to ship to data centre customers by the end of this year, alongside the ROCm 2.0 release. Meanwhile, the MI50 won’t become available until the end of Q1 2019. Pricing indications were not provided by AMD.