AMD is stepping up its efforts to extol the virtues of its FreeSync technology. Yesterday the firm shared a new blog post, a new video, and information about an upcoming demo that will showcase the technology.

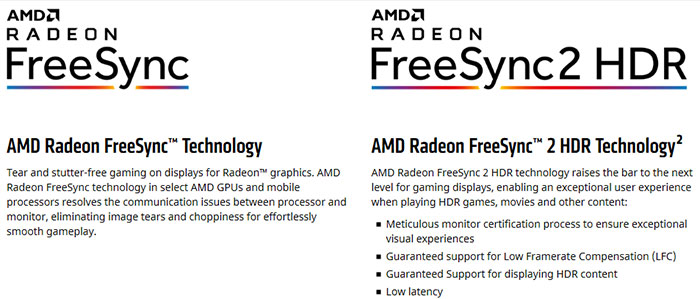

First of all, the chip designer has decided that the FreeSync 2 brand doesn't clearly communicate what is on offer. In brief, FreeSync 2 combines the original FreeSync variable refresh rate technology, including a wide refresh rate range and low framerate compensation (LFC), with HDR rendering. It has therefore been renamed FreeSync 2 HDR, so that buyers can see its advantages at a glance.

AMD says that 9 out of 10 gaming monitors with GPU adaptive sync technology are based on its Radeon FreeSync technology. FreeSync 2 (now FreeSync 2 HDR), however, is supported by only 11 monitors released so far, though that number is growing. AMD intends to make FreeSync monitors stand out more in retail stores but has not yet revealed how it will do this; it hints that it may encourage or facilitate side-by-side, in-store quality comparison demos.

For those wishing to provide vivid comparisons of FreeSync on versus off and HDR versus SDR visuals, AMD is close to releasing a new demo. AMD's Radeon FreeSync 2 HDR Oasis Demo is built on Unreal Engine 4, and the display can easily be toggled to show a modern game engine running with and without FreeSync, and in SDR or HDR. The demo also provides transport controls (forward/reverse and panning speed) and lets you tweak various other typical game visual settings.

The Oasis Demo video is available to watch today, but the demo software itself is not yet available for public download. When it is released, the install files will be an approximately 6GB download.