PC enthusiasts and gamers are eager to learn more about the next-generation consumer graphics cards from Nvidia. Many have already built up their hopes based upon official green team boasts, and various leaks, about the performance of Ampere GPUs. Those information nuggets are still rather thin on the ground though, so the publication of OctaneBench results from an Nvidia Ampere A100 GPU system user ahead of the weekend was very welcome.

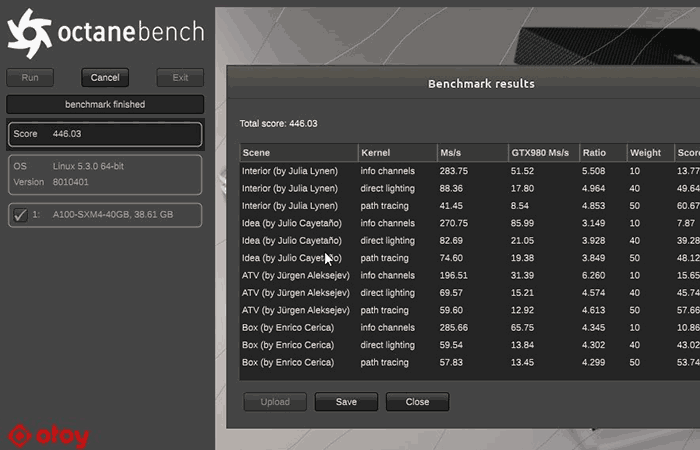

Jules Urbach, CEO of OTOY, the developer and maker of OctaneRender software, shared the Nvidia Ampere A100 GPU benchmark scores on Friday. OctaneRender, and thus OctaneBench, only works on Nvidia CUDA platforms, but it is useful for comparing different generations and models of GPU from the green team.

So, what did Urbach find? The Nvidia Ampere A100 GPU scored 446 points in OctaneBench OB4. From this headline result Urbach exclaimed that "Ampere appears to be ~43% faster than Turing in OctaneRender - even w/ RTX off!"

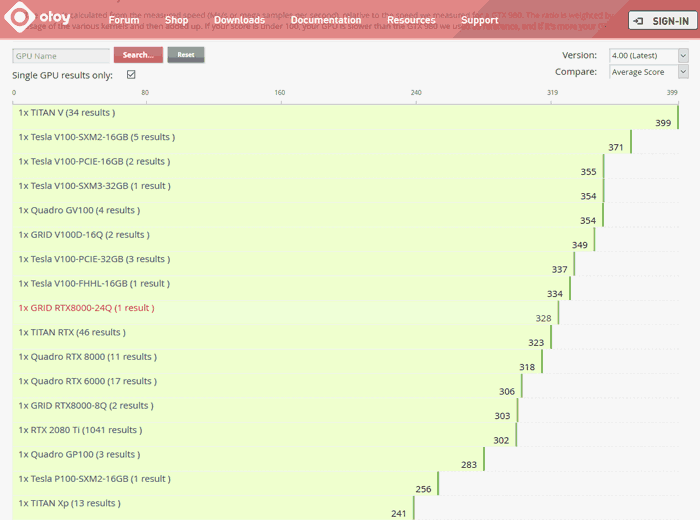

If you look at the OB4 results chart, partly reproduced above, you will see that the best consumer Turing graphics card, the GeForce RTX 2080 Ti, scores 302 points. This is the likely reference for the claim, but against that score the A100's 446 points work out to a boost of roughly 48 per cent rather than 43 per cent. In fact, the best-performing Turing GPU in the results table is the Grid RTX 8000-24Q, scoring 328 points, which would put the A100 about 36 per cent ahead.
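For readers who want to check the percentages, here is a minimal Python sketch of the arithmetic, using only the three OB4 scores quoted in this article. The helper name `percent_faster` is our own invention for illustration and has nothing to do with OctaneBench itself.

```python
def percent_faster(new_score: float, baseline_score: float) -> float:
    """Return how much higher new_score is than baseline_score, in per cent."""
    return (new_score / baseline_score - 1.0) * 100.0


# OctaneBench OB4 scores as quoted in the article (points).
A100_SCORE = 446
RTX_2080_TI_SCORE = 302
GRID_RTX_8000_SCORE = 328

vs_2080_ti = percent_faster(A100_SCORE, RTX_2080_TI_SCORE)
vs_grid_rtx_8000 = percent_faster(A100_SCORE, GRID_RTX_8000_SCORE)

# Baseline score that an exact 43 per cent uplift would imply.
# Urbach's figure was given as "~43%", so treat this as approximate.
implied_baseline = A100_SCORE / 1.43

print(f"A100 vs GeForce RTX 2080 Ti: {vs_2080_ti:.1f}% faster")
print(f"A100 vs Grid RTX 8000-24Q:   {vs_grid_rtx_8000:.1f}% faster")
print(f"Baseline implied by 43%:     {implied_baseline:.0f} points")
```

The implied baseline lands between the two Turing scores quoted above, so it is not clear from the chart alone which Turing result Urbach was comparing against.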

In the current OB4 results table, Nvidia Volta takes the top places, with this GPU architecture powering the leading Titan V, Tesla V100, and Quadro GV100 accelerators. The Nvidia Ampere A100 GPU is still ahead of these, but only by between 11 and 33 per cent.

As a reminder, the Nvidia Ampere A100 GPU is built on TSMC 7nm with 3D stacking and contains 54 billion transistors. It features 3rd gen Tensor cores plus 3rd gen NVLink and NVSwitch, supports Multi-Instance GPU (MIG), and is paired with 40GB of super-fast HBM2e memory.

Source: Jules Urbach via VideoCardz.