Nvidia went official with its GeForce MX450 GPU for ultraportable laptops late last month. However, we were a bit disappointed that the tech specs page was rather lacking in details. Such omissions lead to head scratching and uncertainty. Making the upgrade even less persuasive, Nvidia didn't even give the new part one of its 'GeForce Performance Score' ratings.

GeForce MX450 (left) vs MX350 (right).

At the time of the official unveiling there was talk that the GeForce MX450 was a significant step up from the MX350, as it would be based upon the Turing TU117 chip, better known as the GPU in the GeForce GTX 1650 graphics cards. It was thought that Nvidia had utilised this GPU but greatly reduced its power consumption by cutting base/boost clocks, narrowing the memory bus, or some combination of these and other measures.

Now, it looks like devices featuring the MX450 have started to be distributed in China, as Zhuanlan (Chinese) has published a review of a Lenovo device featuring this GPU, including comparisons with the MX350, Vega 8 (Ryzen 9 4900H), and Iris Plus Graphics G7 (Core i7-1065G7), among others.

Synthetic benchmarks

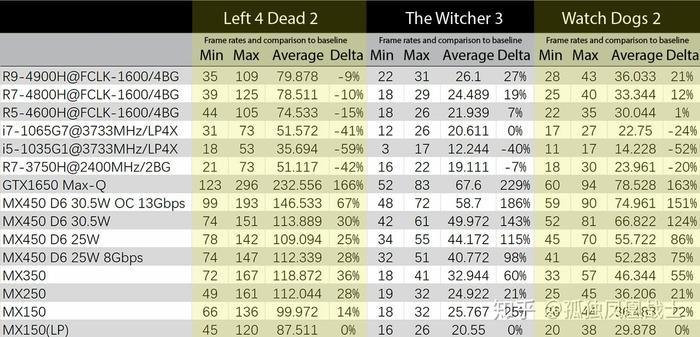

Before going into benchmarks the testers checked out the GeForce MX450 in GPU-Z and NV-Inspector. These apps reveal that the MX450 used by Lenovo is a 28.5W-rated part based on the TU117 GPU, with 896 SPs running at a boost clock of 1.575GHz and 2GB of GDDR6 memory on a 64-bit memory bus. As NotebookCheck sums up, this chip delivers about a 33.5 per cent performance boost in gaming compared to the MX350. On the flip side, the MX450 is about 37 per cent slower than the TU117-based GTX 1650 due to reduced memory bandwidth (64- vs 128-bit bus) and fewer ROPs (16 vs 32). The testers also observed that overclocking the memory gives the MX450 a good performance hike.
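To put those two percentages on a single scale, here is a rough back-of-the-envelope sketch in Python. It assumes the 33.5 per cent and 37 per cent figures can be chained together, which only holds if both were measured on comparable workloads; the function and constant names are ours, for illustration only.

```python
# Figures quoted in the article. Chaining them is an assumption:
# it only holds if both were measured on comparable workloads.
MX450_GAIN_OVER_MX350 = 0.335     # MX450 is ~33.5% faster than the MX350
MX450_DEFICIT_VS_GTX1650 = 0.37   # MX450 is ~37% slower than the GTX 1650


def relative_to_gtx1650():
    """Return estimated performance of each part, with the GTX 1650 as 1.0."""
    mx450 = 1.0 - MX450_DEFICIT_VS_GTX1650
    mx350 = mx450 / (1.0 + MX450_GAIN_OVER_MX350)
    return {"GTX 1650": 1.0, "MX450": mx450, "MX350": mx350}


if __name__ == "__main__":
    for name, score in relative_to_gtx1650().items():
        print(f"{name}: {score:.2f}")
```

On these assumptions the MX450 lands at roughly 0.63 of a GTX 1650 and the MX350 at a little under half, which lines up with the halved bus width and ROP count being the main handicaps.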

Gaming benchmarks

The MX450 might appeal to systems makers as it allows them to reuse designs which usually come with GTX 1650 and better GPUs. Zhuanlan observes, though, that the MX450 in the Lenovo test machine drew more power than the GTX 1650 Max-Q. In The Witcher 3, for example, the MX450's average power consumption was between 30.5 and 31.3W, which exceeds that of the GTX 1650 Max-Q (28.5W). Meanwhile, if a systems maker wants to reuse a board which previously housed an MX150/MX250/MX350, it can opt for the 25W version of the MX450 with GDDR5 memory support.
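For a sense of scale on the power gap, the quick Python sketch below works out how far the measured Witcher 3 figures sit above the GTX 1650 Max-Q number. The wattages are those quoted above; the helper name is ours, for illustration only.

```python
# Wattages as quoted in the article for The Witcher 3.
GTX1650_MAXQ_W = 28.5
MX450_WITCHER3_W = (30.5, 31.3)   # low and high ends of the measured average


def excess_percent(measured_w, reference_w):
    """Percentage by which measured_w exceeds reference_w."""
    return (measured_w / reference_w - 1.0) * 100.0


low, high = (excess_percent(w, GTX1650_MAXQ_W) for w in MX450_WITCHER3_W)
print(f"MX450 draws {low:.1f}% to {high:.1f}% more than the GTX 1650 Max-Q")
# prints "MX450 draws 7.0% to 9.8% more than the GTX 1650 Max-Q"
```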

Source: Zhuanlan (Chinese), via NotebookCheck.