In recent HEXUS news we have seen two laptops launched sporting what their makers refer to as "Intel Discrete Graphics" (Asus VivoBook Flip 14) or "the new Intel Iris Xe MAX discrete graphics" (Acer Swift 3X, scroll down to the end of the linked story). To be clear, what Asus and Acer are referring to is the discrete Intel GPU codenamed DG1. Anandtech confirms that DG1's commercial product name is 'Iris Xe Max'. Intel says we should expect Iris Xe Max laptops to become available very shortly.

As we wait for the first Intel Iris Xe Max laptops to become available, it is natural to wonder what kind of performance users should expect from this brand new discrete GPU. Some users and testers appear to have already got their hands on devices packing this GPU, as Twitter tech leakster Tum Apisak has recently uncovered some Geekbench 5 data about the Intel Iris Xe Max.

The data appears to reveal that the new Intel GPU has 96 execution units (for 768 shader cores) with a 1.5GHz clock speed, partnered with 3GB of memory. The two GB5 OpenCL scores unearthed by Tum (11,885 and 11,857) sit somewhere between typical Nvidia GeForce MX330 and MX350 results (OpenCL scores of approximately 11,100 and 13,800, respectively). Another neighbouring discrete mobile GPU is the AMD Radeon RX 550X, notes Tom's Hardware.
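For a rough sense of where those leaked numbers land, the short sketch below runs the arithmetic on the figures quoted above. It assumes 8 shader cores per execution unit (simply 768 / 96, as implied by the reported data) and uses the approximate MX330 and MX350 scores as the comparison points; `relative_position` is a hypothetical helper written for illustration, not part of any benchmark tool.

```python
# Figures as reported above (Geekbench 5 OpenCL); MX330/MX350 values are approximate.
EXECUTION_UNITS = 96
SHADERS_PER_EU = 8  # assumption: 768 / 96, as implied by the leaked data
IRIS_XE_MAX_SCORES = [11_885, 11_857]
MX330_APPROX = 11_100
MX350_APPROX = 13_800

shader_cores = EXECUTION_UNITS * SHADERS_PER_EU


def relative_position(score: float, low: float, high: float) -> float:
    """Hypothetical helper: where `score` falls between `low` (0.0) and `high` (1.0)."""
    return (score - low) / (high - low)


def compare(score: float) -> dict:
    """Percentage difference versus each Nvidia part, plus position between them."""
    return {
        "vs_mx330": score / MX330_APPROX - 1,
        "vs_mx350": score / MX350_APPROX - 1,
        "position": relative_position(score, MX330_APPROX, MX350_APPROX),
    }


if __name__ == "__main__":
    print(f"Shader cores: {shader_cores}")
    for score in IRIS_XE_MAX_SCORES:
        c = compare(score)
        print(
            f"{score}: {c['vs_mx330']:+.1%} vs MX330, {c['vs_mx350']:+.1%} vs MX350, "
            f"{c['position']:.0%} of the way from MX330 to MX350"
        )
```

On these approximate figures, both leaked results sit a few percent above the MX330 and well short of the MX350, nearer the lower end of that range.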

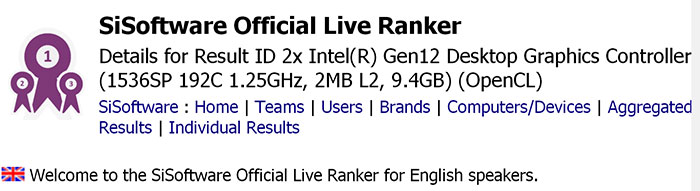

On its own, the Iris Xe Max is pretty much on a par with its rivals and doesn't have any particular appeal on the surface, but it is expected to stand out when working in multi-GPU mode alongside the CPU's onboard Xe graphics. Perhaps we will see evidence of this multi-GPU performance boost when systems ship with final drivers. Tum Apisak previously spotted a SiSoft Sandra database entry showing that some apps already see these new systems as multi-GPU systems, as you can see below.

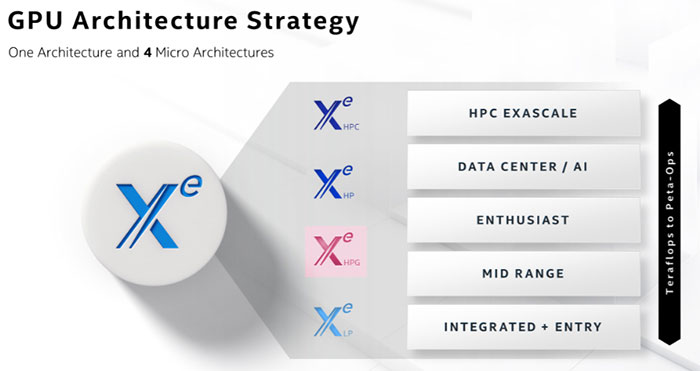

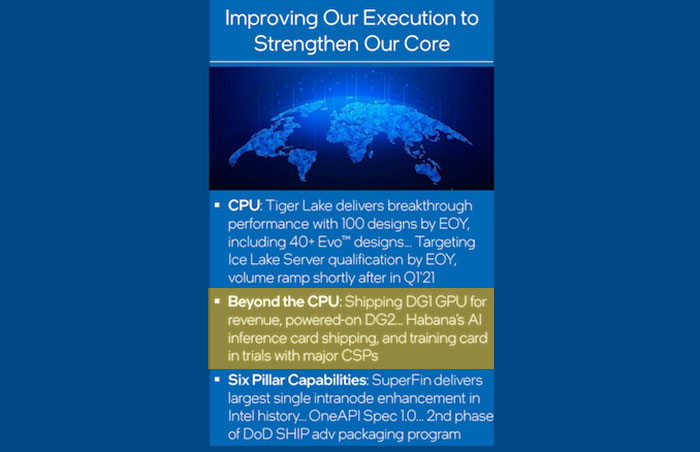

In the wake of its recent financial results release, Intel confirmed that it had successfully powered on DG2 silicon, as you can see from the top image, which also confirms shipments of DG1. The new DG2 is based on the upcoming Intel Xe-HPG architecture, and the company says that DG2 will "take our discrete graphics capability up the stack into the enthusiast segment."