Overclocking expert der8auer has shared a new video which takes a deep dive into the power consumption of the AMD X570 chipset (German language version here). This is a bit of a hot topic with enthusiasts, as AMD and its motherboard partners have gone against the grain by fitting actively cooled, fan-equipped chipset heatsinks to almost all the motherboards we have seen become available thus far.

In the HEXUS editor's study of the X570 chipset, published on Sunday, it was observed that "X570 is clearly superior to X470 in every way other than power". We hear that it uses 13W at most, when put under the cosh, double the power consumption of X470 - a necessary 'evil' one might conclude. Most assumed the extra power was needed when the chipset is pushed by the demands of new super-fast PCIe 4.0 devices (a major difference from X470). However, der8auer's initial testing shows that using PCIe 4.0 peripherals under stress doesn't make much of a difference to power consumption…

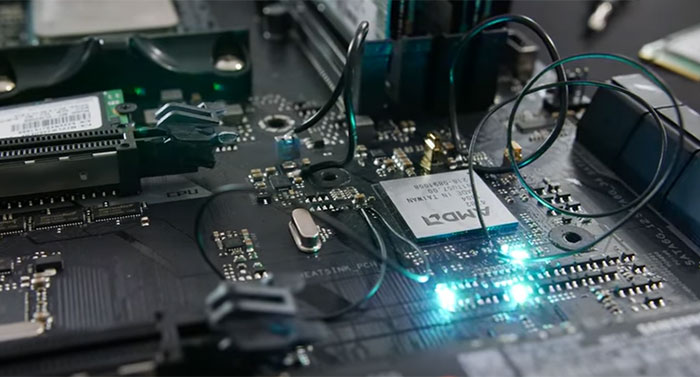

At the start of the video der8auer explains the difficulties in monitoring chipset power consumption: it isn't like a peripheral hanging off a single connector, as there are multiple connections to the PSU on both sides of the motherboard. After some deft soldering of wires to strategic motherboard power transfer components he ended up with a spaghetti of wires whose readings could be monitored, summed and converted to provide a chipset power consumption figure.
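To illustrate the "monitored, added and converted" step, here is a minimal Python sketch that assumes each tapped point yields a voltage and a current reading, so that power per rail is simply volts times amps. The rail names and readings below are hypothetical placeholders chosen for the arithmetic, not der8auer's measurements, and the video may well derive its figures differently.

```python
from dataclasses import dataclass


@dataclass
class RailSample:
    """One reading from a tapped power rail (names are hypothetical)."""
    name: str
    volts: float
    amps: float

    @property
    def watts(self) -> float:
        # Power on a single rail: P = V * I
        return self.volts * self.amps


def chipset_power(samples):
    """Sum the per-rail power to get one chipset figure in watts."""
    return sum(s.watts for s in samples)


# Placeholder readings, NOT taken from the video.
samples = [
    RailSample("rail_a", 1.0, 3.0),
    RailSample("rail_b", 1.8, 0.5),
    RailSample("rail_c", 3.3, 0.25),
]

total = chipset_power(samples)
print(f"Chipset power: {total:.2f} W")
```

The point is only that a single chipset figure has to be assembled from several separate measurements, which is why the wiring job was needed in the first place.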

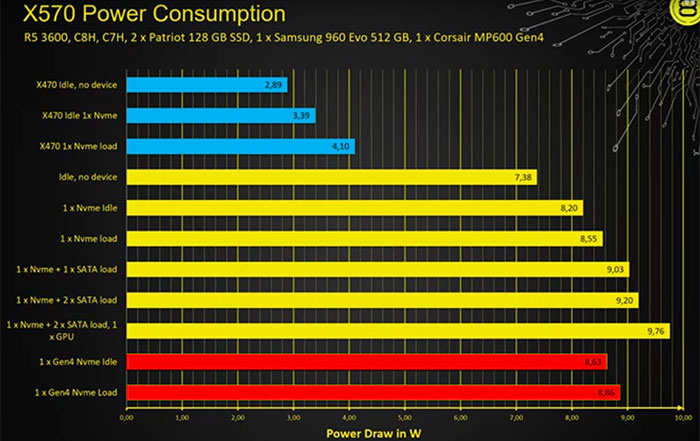

Moving on in the video to about 10mins 30s, der8auer says he spent a couple of weeks testing the X570 chipset against the X470 chipset, and he has some interesting tables to share. The main results table is reproduced below. The top three results (in blue) are from an X470 board: at idle, with one fast NVMe SSD attached, and then with that SSD under load (running CrystalDiskMark).

You can see that the X570 power consumption starts from a much higher base, idling at 7.35W with no NVMe drive attached. The yellow highlighted bars show the maximum power usage der8auer observed with various combinations of NVMe Gen 3 and SATA drives, and one included a PCIe graphics card running the FurMark benchmark too. Finally the bottom two results, in red, show power consumption with a Corsair NVMe Gen 4 SSD attached, both inactive and active. Der8auer is surprised by how little difference there is between NVMe Gen 3 and Gen 4 under load in terms of power consumption "because everybody kept telling us (at Computex) that the chipset has much higher power consumption because of PCI Express Gen 4." In summary the OC expert couldn't pin down why the chipset actually requires so much extra power. Hopefully things will become clearer as boards are tested by more tech review sites like ourselves; it is still early days.

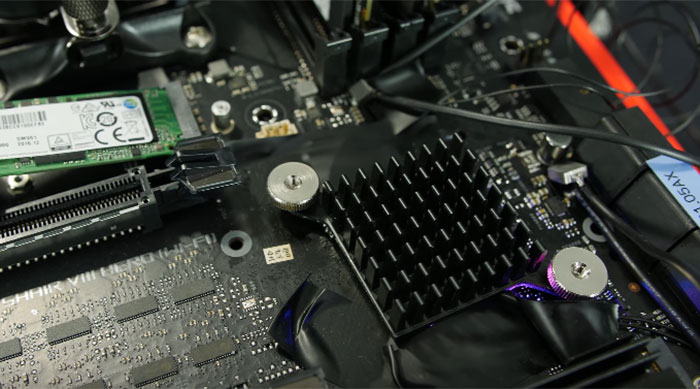

Last but not least, der8auer says he "really [has] no idea what is going on with these (X570) cooling solutions". He says that he managed to raise chipset power consumption in his testing to near 10W by attaching multiple devices and stressing them all. Then, with "almost no airflow" and a tiny chipset heatsink (pictured above) fitted in place of the active fan cooler, temperatures peaked at 74°C.

Please stay tuned for several X570 chipset motherboard reviews that we have in the pipeline at HEXUS.