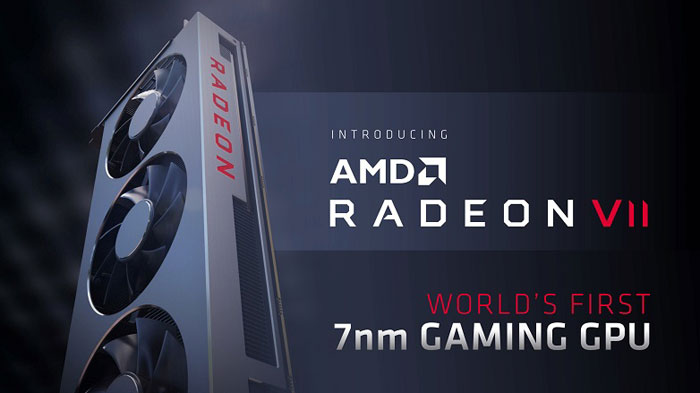

Earlier this week some sites shared rumours that AMD Radeon VII graphics cards would be in short supply. These concerns seem to have a common source: TweakTown's exclusive report which, citing industry contacts, stated that "less than 5000" of the 7nm Vega 20 GPU-packing, 300W, 16GB HBM2 cards would be made. Furthermore, TweakTown went on to say that there would be "no custom Radeon VII graphics cards made" by AIBs, but its source wasn't so certain about this assertion.

AMD has since officially responded to the 'short supply' aspersions. ExtremeTech, part of Ziff Davis, says it has received a statement from AMD that addresses the Radeon VII rumours mentioned above. I've reproduced the brief statement in full below:

"While we don't report on production numbers externally, we will have products available via AIB partners and AMD.com at launch of Feb. 7, and we expect Radeon VII supply to meet demand from gamers."

Admittedly, AMD's statement is a rather weak rebuttal. The AIB Radeon VII cards are expected to be simply partner-branded AMD reference designs, and AMD doesn't actually deny the reported production quantities; it just asserts that there will be enough.

Shortly after its big reveal we got to see quite a few more gaming benchmarks for the AMD Radeon VII. However, despite the lack of available product and third-party test results, Nvidia CEO Jensen Huang claimed that the rival red team's new hope offered "lousy" performance and added that "we'll crush it".

AMD's new Radeon VII will become available from 7th Feb, priced at $699.