Late last month the official YouTube blog was updated with a low-key article about how its developers are "continuing our work to improve recommendations on YouTube." The piece was a classic positive spin from Google on something that seems to have very negatively affected several individuals: being drawn down a rabbit hole of content that could create various flavours of extremists, conspiracy theorists, anti-vaxxers, and so on. The YouTube blog touches upon this thorny topic in its middle section but opens by saying it hopes to do things like "help users find a new song to fall in love with".

The key change being implemented by YouTube this year is that it "can reduce the spread of content that comes close to - but doesn’t quite cross the line of - violating our Community Guidelines". Content that "could misinform users in harmful ways" will find its influence reduced. Videos "promoting a phony miracle cure for a serious illness, claiming the earth is flat, or making blatantly false claims about historic events like 9/11" will be affected by the tweaked recommendation AI, we are told.

YouTube is clear that it won't be deleting these videos, as long as they comply with its Community Guidelines. Furthermore, such borderline videos will still be shown to users who subscribe to the source channels.

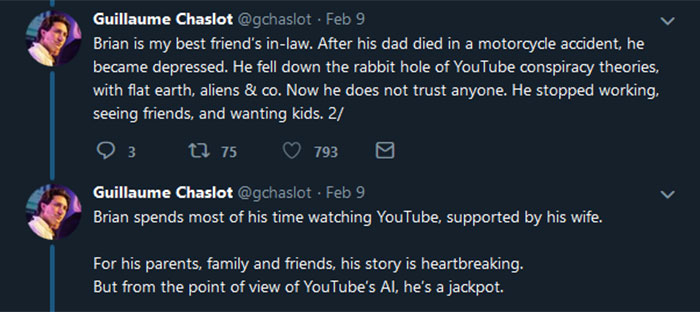

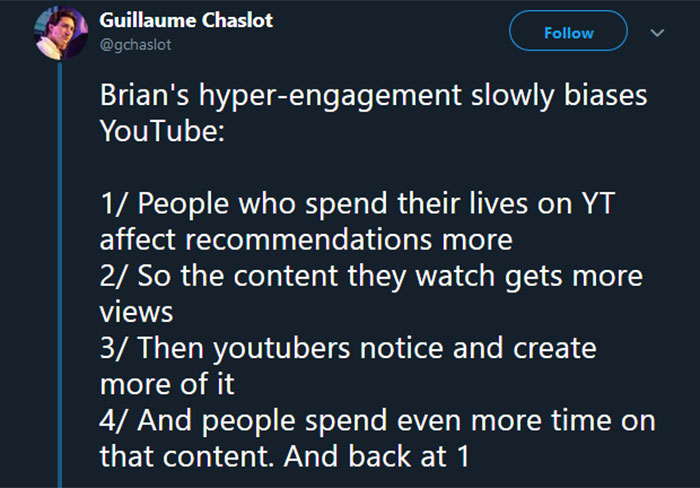

At the weekend former Google engineer Guillaume Chaslot admitted that he helped to build the current AI used to promote recommended videos on YouTube. In a thread of Tweets, Chaslot described the impending changes as a "historic victory". His opinion comes from seeing and hearing of people falling down the "rabbit hole of YouTube conspiracy theories, with flat earth, aliens & co".

One person in Chaslot's personal circle, 'Brian', has basically withdrawn from society after being fed conspiracy theory videos by YouTube. However, YouTube's AI will have seen this acquaintance as a 'jackpot', as it tuned his video recommendations to inspire hyper-engagement. More views mean more ad dollars for YouTube, and having seen its 'success' with Brian, the YouTube AI would try to replicate this engagement pattern with others.

One particularly badly affected Seattle man ended up killing his brother, believing him to be a lizard. His public YouTube likes are available to browse and give an indication of his journey down the rabbit hole.

Chaslot asserts that the YouTube AI change will "save thousands". The two cases above are extreme examples, but they are likely just the tip of the iceberg: many more people believe in conspiracies and alternative facts without thinking critically, perhaps because YouTube and other social media companies have fed them these videos.

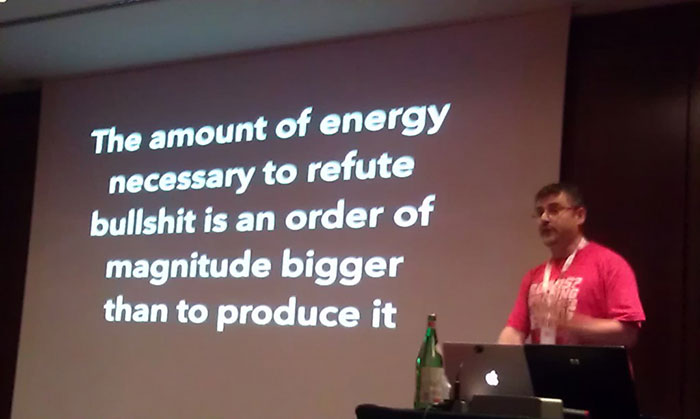

Unfortunately, as noted by Alberto Brandolini, pictured above, there is an asymmetry with BS: refuting it takes far more effort than producing it.