Tobii, best known for its eye tracking technologies and devices used for enhanced Windows gaming, has launched Tobii Spotlight Technology. The firm took the wraps off the technology at SIGGRAPH last week. In brief, Tobii is leveraging its eye tracking tech to make foveated rendering, built on techniques such as VRS, smarter and more efficient.

HEXUS regulars will be aware that Nvidia introduced VRS (Variable-Rate Shading) with the launch of the Turing architecture in September last year. At the time the HEXUS editor thought it would be a highly suitable technique for VR rendering as it can provide high res imagery where needed, and lower res imagery at the periphery of scenes. Thus it can help deliver better visuals, and faster frame rates, with less stress on the GPU. AMD GPUs don't yet support VRS but support is thought to be in the pipeline.

The paragraph above makes a case for using VRS when rendering to VR headsets, but on its own the approach suffers from a startling lack of refinement: it isn't just a user's head that moves in VR, our eyes move around too. This is where Tobii Spotlight Technology will make a difference. "Tobii Spotlight Technology is advanced eye tracking specialised for foveation," said Henrik Eskilsson, CEO of Tobii. In case you don't already know, the fovea is a small central portion of your retina, which sees in high resolution, while your peripheral vision is effectively a blur. Tobii has published a dedicated foveation technology page to explain why dynamic foveated rendering (DFR) is an important advance on VRS.
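To make the idea concrete, here is a minimal Python sketch of how a gaze-driven shading-rate map might be built. It is an illustration only, not Tobii's or Nvidia's implementation: the function names, the tile grid and the `inner` and `outer` radii are all hypothetical, and only the 1x1, 2x2 and 4x4 coarse shading rates are borrowed from VRS.

```python
import math

# Fraction of pixels actually shaded at each coarse shading rate:
# 1x1 shades every pixel, 2x2 one in four, 4x4 one in sixteen.
SHADED_FRACTION = {"1x1": 1.0, "2x2": 0.25, "4x4": 0.0625}


def rate_for_tile(tile_x, tile_y, gaze_x, gaze_y, inner=0.15, outer=0.35):
    """Pick a shading rate from the tile's distance to the gaze point.

    Coordinates are normalised to 0..1. `inner` and `outer` are
    hypothetical radii, not values published by Tobii or Nvidia.
    """
    distance = math.hypot(tile_x - gaze_x, tile_y - gaze_y)
    if distance <= inner:
        return "1x1"  # foveal region: full resolution
    if distance <= outer:
        return "2x2"  # transition ring
    return "4x4"      # periphery: coarsest shading


def build_rate_map(gaze_x, gaze_y, tiles=16):
    """Return a tiles x tiles grid of shading rates around the gaze point."""
    rows = []
    for row in range(tiles):
        y = (row + 0.5) / tiles  # centre of the tile, normalised
        rows.append([
            rate_for_tile((col + 0.5) / tiles, y, gaze_x, gaze_y)
            for col in range(tiles)
        ])
    return rows


def average_shading_rate(rate_map):
    """Mean fraction of pixels shaded across the whole map."""
    cells = [SHADED_FRACTION[rate] for row in rate_map for rate in row]
    return sum(cells) / len(cells)
```

With fixed foveation the map would always be built around the centre of the view, for example `build_rate_map(0.5, 0.5)`. With eye tracking, the gaze coordinates reported each frame are passed in instead, so the full-resolution region follows the eye and the coarse region can cover more of the image.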

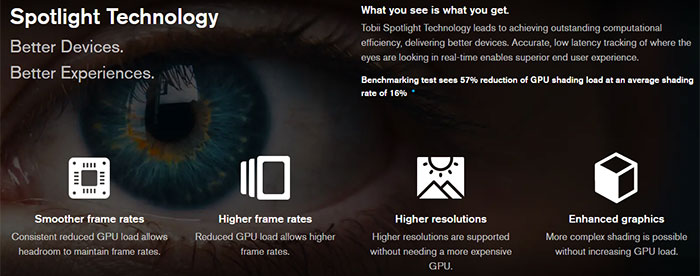

At SIGGRAPH Tobii showed off a PC system combining its Spotlight technology with an Nvidia RTX 2070 graphics card (with DFR enabled via Nvidia VRS) and an HTC Vive Pro Eye headset, running the game ShowdownVR from Epic Games. Benchmarks using this setup delivered GPU rendering load reductions of 57 per cent, with the average shading rate under dynamic foveated rendering falling to 16 per cent from around 24 per cent. Thus the GPU can work on other aspects of the game, and/or boost frame rates, or save power.
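As a quick check on how the two shading-rate figures relate, the short Python snippet below works out the relative drop from 24 per cent to 16 per cent. The helper name is our own. Note that the result is about one third, so the 57 per cent load reduction is presumably measured against a different baseline; that is an assumption on our part, as the comparison basis is not stated here.

```python
def relative_reduction(before, after):
    """Fractional drop when going from `before` to `after`."""
    return (before - after) / before


# Average shading rates quoted for the SIGGRAPH demo, in per cent.
shading_rate_drop = relative_reduction(24.0, 16.0)
print(f"{shading_rate_drop:.1%}")  # prints 33.3%
```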

Tobii worked closely with Nvidia to implement its DFR system, and sees it being used in future to tailor data transfer and streaming to where the user is looking, for example over 5G networks. The firm even expects its Spotlight technology to be able to keep shading tasks manageable in VR headsets with resolutions exceeding 15K.