We have talked in some depth about the progress of Nvidia DLSS and the emergence of AMD FidelityFX Super Resolution (FSR). The latter caused such a splash that the editor tested it when it was brand new, talking through the technology and the modes on offer, and observing the performance implications on both AMD and Nvidia GPUs across various games and screen resolutions. You can read his conclusions on the tech as it worked on day one here.

Several other tech sites, tech forums, and YouTubers have spent considerable time weighing up the respective merits of DLSS and FSR. The problem, so far, is that no game supports both DLSS and FSR, so it has not been possible to compare the technologies directly in a practical example – a shipping game. Instead, reviewers usually compare the various DLSS or FSR quality levels with native rendering, and sometimes with a game's built-in resolution scaling settings too.

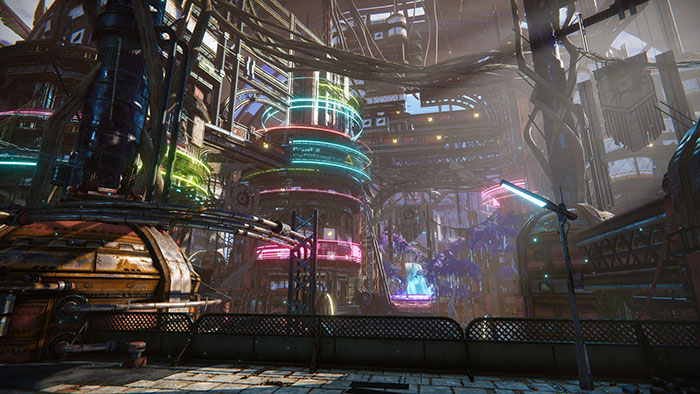

Two games on the list of upcoming AMD FSR titles that also support Nvidia DLSS are Edge of Eternity and Necromunda: Hired Gun. The developers of the former have just completed their FSR implementation and shared some thoughts with WCCF Tech on how the two upscaling technologies compare.

One of the first questions asked by WCCF Tech's Alessio Palumbo concerned how DLSS and FSR compared in terms of implementation in the Unity-engine game. The devs responded that "implementing DLSS was quite complex to integrate into Unity for a small studio". In contrast, adding FSR was "very easy… it only took me a few hours". It was noted, however, that FSR worked much better after replacing the built-in Unity TAA with the open-source AMD Cauldron TAA. Thus, while FSR is very easy to implement, getting the most out of it requires some thought, which is likely beyond what user tweaks such as the recent GTA 5 FSR mod can achieve.

The second question from WCCF Tech concerned the visual quality difference between the DLSS and FSR implementations. Importantly, the dev said that "both technologies give amazing results, and I have a hard time seeing differences between them." Going into more detail on his preferences, the dev said that he would prefer to use FSR on 4K monitors, but that for 1080p or even 720p gaming DLSS "gives a better result". Interestingly, the developers have tested FSR on the GPD Win 3 (with an Intel APU), upscaling to 720p, and claim that the result looked very similar to native rendering while running at a smooth 60fps.

Going forward, the Edge of Eternity developers reckon that FSR will be more commonly integrated into upcoming games on PC and consoles, while DLSS might find more favour with big studios and AAA game titles.